Projects

Tools for Open and Reproducible Neuroscience

In this DFG core facility project, we develop tools and practices for open neuroimaging. Our approaches build on the Brain Imaging Data Structure (BIDS), a community-developed standard for data storage and metadata. Using such a data structure facilitates collaborative research: because the organization of the data is commonly known, it does not need to be explained to each new collaborator. Moreover, it eases the sharing of data and allows for meta-analyses and big data approaches, which are referred to as gold-standard scientific practices. Since data organization is such an important and fundamental topic within the open science community, our projects are centered around BIDS.
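To illustrate why a commonly known organization needs no further explanation, the following minimal sketch reads the meaning of a file directly from its BIDS-style name, which encodes `key-value` entities separated by underscores, followed by a suffix and an extension. The function `parse_bids_filename` and the example path are hypothetical and use only the Python standard library; this is not the API of any of the tools listed below, and it does not validate names against the BIDS specification.

```python
from pathlib import PurePosixPath


def parse_bids_filename(path):
    """Split a BIDS-style file name into entities, suffix and extension.

    Hypothetical helper for illustration only: it performs no validation.
    """
    name = PurePosixPath(path).name
    # Everything after the first dot is treated as the extension (e.g. ".nii.gz").
    stem, dot, ext = name.partition(".")
    entities = {}
    suffix = None
    for part in stem.split("_"):
        if "-" in part:
            # Entities have the form key-value, e.g. "sub-01" or "task-rest".
            key, value = part.split("-", 1)
            entities[key] = value
        else:
            # The part without a hyphen is the suffix, e.g. "bold".
            suffix = part
    return {"entities": entities, "suffix": suffix, "extension": dot + ext}


# Example path, made up for this sketch.
info = parse_bids_filename("sub-01/ses-02/func/sub-01_ses-02_task-rest_bold.nii.gz")
print(info)
```

Because every dataset follows the same convention, the same few lines work for any subject, session, or task without dataset-specific documentation.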

In addition, we provide accessible overviews of topics relevant for open neuroimaging (and beyond), such as the most comprehensive review paper on tools and practices currently available and an overview of privacy regulations in the three jurisdictions with the largest amounts of research data (Europe, USA, China).

- ancpBIDS: ancpBIDS is a lightweight Python library to read, query, validate, and write BIDS datasets. It provides a unified and modality-agnostic I/O interface for researchers to handle BIDS datasets in their workflows or analysis pipelines. Its implementation is based on the BIDS schema, which allows the library to evolve with the BIDS specification in a generic way.

- BIDS Conversion GUI: A first obstacle to useful data sharing is that data are not saved or are stored in an idiosyncratic structure. We are currently developing a user-friendly GUI for data conversion to BIDS, including capabilities for metadata annotation, to make research data FAIR (findable, accessible, interoperable, reusable).

- MEGqc: Magnetoencephalographic and electroencephalographic data are prone to many sources of noise and artifacts that degrade data quality. Unfortunately, data quality is rarely reported. This tool is based on BIDS and performs automated quality assessment with human- and machine-readable output.

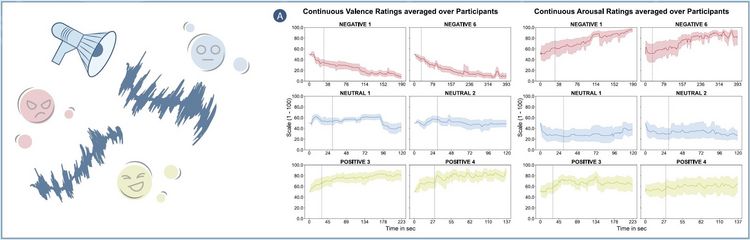

- GAUDIE: a thoroughly validated database of naturalistic speech stimuli for emotion induction.

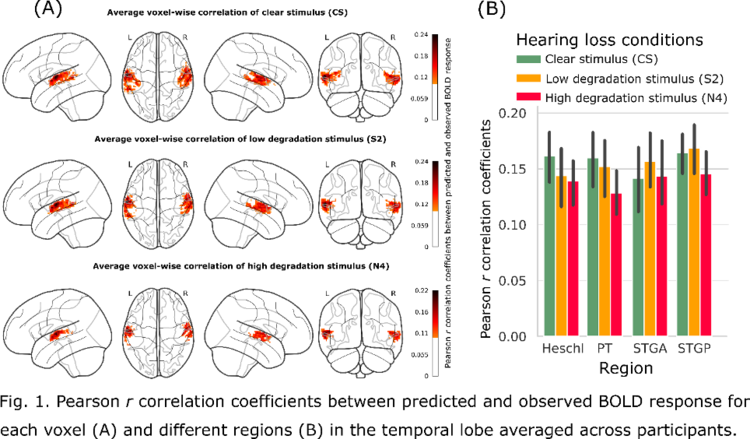

- M-CCA: a tool for optimized functional inter-individual data combination. This approach is constantly being further improved and validated.