Since the launch of the new chatbot ChatGPT, artificial intelligence has become a major topic in the media. Many are excited by the possibilities the platform offers. It gives intelligent answers to all kinds of questions and can write long and informative texts on every conceivable subject. But there are also many critical voices. In this interview, computer scientist Oliver Kramer talks about the opportunities presented by the new technology and to what extent it can advance digitalisation.

Prof. Kramer, will ChatGPT trigger a revolution in artificial intelligence (AI)?

The technology is very interesting because it makes AI faster and more powerful. It is the latest of several developmental leaps in AI in the last 20 years. I'm sure it will change our lives and the way we work, but I wouldn't talk of a revolution.

Just to be clear, what exactly do experts mean by the term AI?

AIs are computer programmes that can learn. They're used for things like image recognition. These programmes are trained using vast amounts of data so that they learn to recognise certain objects – faces, for instance. This is also known as machine learning. ChatGPT is what is known as a Large Language Model, which has been fed millions of texts on all kinds of subjects. As a result, it contains a large part of the world's knowledge.

And what does it do with it?

ChatGPT and other AI systems basically do nothing more than map learned data onto other data. A simple example would be translating a German text into French. We computer scientists call this "sequence to sequence learning" – in this case a sequence of German words is mapped onto a sequence of French words. But the resulting translation is not particularly intelligent. ChatGPT adds a whole new aspect called "attention" or "self-attention" – a concept introduced by a team of computer scientists in 2017 in the seminal paper "Attention Is All You Need". With this approach, an AI is made capable of independently assessing which part of an input is important for the desired response and which isn't. This means that the AI has the capacity to pay attention and take context into consideration, even with longer queries. It's very similar to information processing in humans. Thanks to our ability to pay attention, we also weigh up which information is important before taking the next step.
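The attention idea described above can be illustrated with a minimal sketch. It is not the code behind ChatGPT: the three tokens and their two-dimensional embeddings are made up for illustration, and the input vectors are used directly as queries, keys and values, whereas a real Transformer first applies learned projection matrices. The sketch only shows how each token weighs up all tokens in the input before producing its output.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equally long vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Simplified scaled dot-product self-attention.

    Assumption: queries, keys and values are the input vectors themselves
    (no learned projections, single head).
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score how relevant every token is to the current one.
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # The output is a weighted mix of all input vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings, chosen by hand for illustration only.
tokens = ["coach", "station", "New York"]
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)

for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the input, itself included. In a trained model these weights come from learned parameters rather than from hand-picked vectors.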

Can you give an example?

Let's say you're chatting to ChatGPT about New York. If you then ask a question like: "Where is the coach station?", ChatGPT will take the context of the conversation into account and tell you the location of the coach station in New York – rather than in another city, for example. A simple voice assistant is not able to do this.

Have you already had experiences with this new type of AI?

Yes, we used these new attention mechanisms in a project aimed at developing drugs against coronaviruses. The project was focused on blocking an enzyme – a protease – that cuts certain proteins when the viruses reproduce. The goal was to find a new drug molecule that would paralyse the protease's cleavage mechanism. We worked with different AI methods. First, we used evolutionary algorithms, which have been established for some time now, to modify molecules from chemical databases via an evolutionary process and make them dock onto the protease and deactivate it. Then we used the new AI method to integrate new proposed molecules into the optimisation process. In this way, individual molecules were continually improved in a kind of optimisation loop. We also used mainframe computers for this optimisation process. With these computers it takes two days to calculate a molecule.
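The optimisation loop mentioned here can be pictured with a toy evolutionary algorithm. This is a sketch under strong assumptions and not the project's actual method: a "molecule" is reduced to a short list of numbers, the names `TARGET`, `fitness`, `mutate` and `evolve` are hypothetical, and the fitness function is a stand-in for a real docking simulation, which the interview says takes days per molecule.

```python
import random

# Hypothetical "ideal" descriptor vector; a stand-in for a well-docking molecule.
TARGET = [0.2, -0.5, 0.8, 0.1]


def fitness(candidate):
    """Toy replacement for a docking score: higher is better, 0 is the optimum."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, TARGET))


def mutate(candidate, rng, step=0.1):
    """Return a copy of the candidate with small Gaussian noise on each value."""
    return [c + rng.gauss(0.0, step) for c in candidate]


def evolve(generations=200, population_size=10, offspring_per_parent=3, seed=0):
    """Run a simple evolutionary loop with plus-selection."""
    rng = random.Random(seed)
    population = [
        [rng.uniform(-1.0, 1.0) for _ in TARGET] for _ in range(population_size)
    ]
    history = [max(fitness(p) for p in population)]
    for _ in range(generations):
        # Variation: every parent produces slightly modified offspring.
        offspring = [
            mutate(parent, rng)
            for parent in population
            for _ in range(offspring_per_parent)
        ]
        # Selection: parents and offspring compete, the fittest survive.
        ranked = sorted(population + offspring, key=fitness, reverse=True)
        population = ranked[:population_size]
        history.append(fitness(population[0]))
    return population[0], history


best, history = evolve()
print("best fitness at start:", round(history[0], 4))
print("best fitness at end:  ", round(history[-1], 4))
```

Because parents compete with their offspring, the best score can never get worse from one generation to the next. In a setting like the one described, new candidates proposed by a learned model could presumably be added to the offspring pool at the variation step.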

Did you find promising drugs against Covid?

Yes, we did. We had to take several things into account, ensuring not only that the molecule inhibited the protease, but also that it was well tolerated and that it wasn't too difficult to produce in the lab. This type of complex compound has to be synthesised atom by atom. It takes up to half a year to get a production process like this up and running. The simpler the structure of the drug molecule, the faster this goes. This example shows how versatile AI is today. Many other research groups at the University's Department of Computer Science are also using AI – Astrid Nieße is using it to optimise the operation of charging stations for electric cars, for example, and my colleague Daniel Sonntag is working on making it easier for humans and robots to work together. Many different AI technologies are used in these projects.

But systems like ChatGPT and the "attention principle" seem to be the dominant topic at the moment.

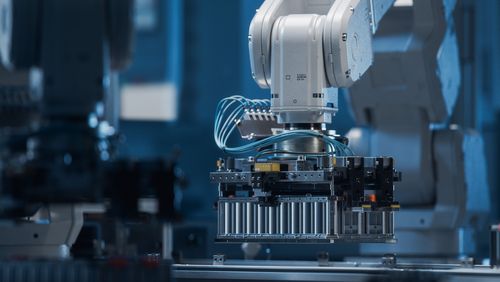

Of course. After all, it paves the way for completely new applications. The one-armed industrial robot PaLM-E, which was developed by Google and the Technische Universität Berlin, is a prime example. Like ChatGPT, this robot is equipped with a Large Language Model which allows it to access the world knowledge contained in the model. Normally, you have to show robots what to do step by step. PaLM-E, by contrast, makes creative use of its knowledge and ability to reason. For example, it opens drawers to look for tools because it knows, thanks to its world knowledge, that tools are often stored in drawers.

In spite of all the enthusiasm, ChatGPT has also prompted warnings about the dangers of AI. How dangerous is it?

Critics often say that we computer scientists can no longer explain or keep track of what algorithms actually do – that AI is essentially taking on a life of its own. This is true to the extent that AI methods such as neural networks do indeed operate independently. That's the whole point. They can solve far more complex tasks than we humans can. A human being can perhaps combine three or four different parameters, but beyond that we lose track. A neural network can combine dozens of parameters and identify connections that we humans would never discover. But that doesn't mean we are losing control. After all, we train the AI using specific information to solve specific problems. And generally, AI is only put into use in everyday life once it has been sufficiently tested – as with automated driving, for example.

So you're saying we don't need to worry about the risks of AI?

Naturally there are also risks. If systems like ChatGPT are able to write entire essays, then as a university lecturer I have to ask myself how I can make sure that my students don't cheat. Since the launch of ZeroGPT a few months ago, we have had software that can check texts to see if they were written by AI. But such systems can also be tricked. Systems like ChatGPT clearly pose challenges. It's entirely possible that jobs in service centres will be lost because ChatGPT can provide very creative answers to many questions. Such systems will also be able to take over certain types of text work in sectors like the advertising industry. But this can also be an advantage if work processes become more efficient as a result. In the meantime, new jobs like that of "prompter" are emerging. A prompter is responsible for writing particularly apt and effective AI prompts so that these systems generate texts with more substance. Basically, we're having the same discussion as we always do when a new AI technology comes onto the market. And the answer is also always the same: a new technology can have disadvantages, but also great potential.

What potential do you see?

What really interests me is combining Large Language Models like the ones ChatGPT is based on with other data sets – image data and videos, or information about diseases or chemical molecules. Perhaps in the future we'll be able to ask questions like: "What is the perfect molecule to inhibit the protease in the coronavirus?". That could save a lot of time. In one of our new projects we're combining a neural network with the new attention mechanism as well as with data from hundreds of wind turbines – with the goal of improving short-term weather forecasts. We want to be able to use the current electricity production data from the many turbines on a wind farm to reliably predict the electricity yield expected from that farm in the next hour. With the expansion of renewables, these short-term forecasts are becoming more and more vital for operating power grids. And this approach can improve short-term forecasts significantly.

The digitalisation of industry and of society as a whole has been high on the political agenda in both Germany and Europe for years. To what extent can the new AI advance digitalisation?

For me, these are two separate worlds. When I think of digitalisation I think of authorities and administrations that are still barely digitalised today. The process begins with digitising correspondence – as PDF documents, for example. The electronic patient file, which is to be introduced nationwide in 2024, is also a step towards digitalisation. It will make the exchange of data between doctors, clinics and patients much easier. Up to now, however, many digitalisation activities have failed due to security concerns. Data security is certainly important, but it often becomes a hurdle when it comes to digitalisation. There's still a long way to go before artificial intelligence comes into play here. Yet especially when it comes to electronic patient records, AI could be very helpful. AI programmes could be used to analyse patient data and identify health risks or potential diseases that would otherwise go undetected, for example.

Interview: Tim Schröder

The article was first published in the research magazine EINBLICKE.