Current topics

From active, non-linear auditory processes and numerical models of hearing to internet transmission of music, listening with hearing aids, and objective speech quality evaluation for mobile phones: hearing acoustics has a direct impact on our daily lives, and not only for people with hearing loss or communication problems in noisy places. The challenges in ensuring and improving acoustic communication in complex acoustic conditions are manifold and are addressed scientifically in a multi-disciplinary approach. From a physical perspective, the challenges lie in analyzing the effective function of the ear and the interaction between interlocutors during acoustic communication, and in transforming this analysis into a model of hearing. This complex-systems approach opens up a wide range of technical applications.

Auditory Scene Analysis:

How do we get an acoustic picture of our surroundings?

Most of hearing takes place subconsciously. Almost everyone is unaware of the complex processing in the ear and brain that transforms sound waves into "heard" information. One of the biggest mysteries is how humans are able to pick out the voice of one talker from a variety of sound sources (other people, barking dogs, passing cars ...). In people with normal hearing, scene analysis works flawlessly. For hard-of-hearing people, however, this is different. They can usually communicate rather effortlessly when no distracting sound sources are present, but have marked difficulty communicating in noisy conditions. Hearing aids can restore this ability only to some extent, since complex scene analysis and signal restoration processes have not yet been successfully replicated technically.

Imagine a cocktail party: a buzz of voices, clinking of glasses, subtle music. Everyone is talking, in pairs or in larger groups, but some people don’t understand what their interlocutor says. Trying to lip-read what is being said is futile, as the ear and brain are not able to cope with the complex acoustic environment. About fifteen percent of all Germans suffer from inner-ear hearing loss. The trend is increasing, because life expectancy in our society is rising continuously and hearing loss is a typical age-related problem. Solving the cocktail-party problem and improving hearing aids will therefore become even more important in the future. The goal of our research into Auditory Scene Analysis is to investigate scene analysis algorithms that may be used as building blocks in future hearing devices to improve sound processing in complex listening conditions.

Josupeit, A., & Hohmann, V. (2017). Modeling speech localization, talker identification, and word recognition in a multi-talker setting. J. Acoust. Soc. Am., 142(1), 35–54. doi.org/10.1121/1.4990375

Josupeit, A., Kopčo, N., & Hohmann, V. (2016). Modeling of speech localization in a multi-talker mixture using periodicity and energy-based auditory features. J. Acoust. Soc. Am., 139(5), 2911–2923. doi.org/10.1121/1.4950699

Dietz, M., Ewert, S. D., & Hohmann, V. (2011). Auditory model based direction estimation of concurrent speakers from binaural signals. Speech Communication, 53(5), 592–605. doi.org/10.1016/j.specom.2010.05.006

Kayser, H., Hohmann, V., Ewert, S. D., Kollmeier, B., & Anemüller, J. (2015). Robust auditory localization using probabilistic inference and coherence-based weighting of interaural cues. The Journal of the Acoustical Society of America, 138(5), 2635–2648.

Digital hearing aids

The first hearing aid to process acoustic signals digitally was presented in 1996. Although digital technology was already widespread, the possibility of fitting a processing unit and digital circuitry into the confined space of a hearing aid, with minimal energy consumption, came as a surprise to the hearing aid industry. Not even mobile phones, which were regarded as a marvel of technology, had this efficiency. The introduction of the digital hearing aid can thus be regarded as a technological leap. Since then, hearing aids have been in constant development, and manufacturers now offer fully digital hearing aids that allow complex processing of acoustic signals. The available processing power advances almost as fast as the CPU performance found in home computers.

Computers (e.g. hearing aids) cannot yet imitate the abilities of the human ear. A hearing aid that uniformly amplifies all acoustic signals does not help in a cocktail party situation. Instead, it has to separate auditory objects and selectively amplify them. It has been shown that, apart from the ear's high selectivity for sounds of different frequency/pitch, amplitude modulation (fast sound level fluctuations) and sound localization are important mechanisms for object separation. Sound localization is strongly linked to binaural hearing (hearing with two ears).
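One binaural cue behind sound localization is the interaural time difference (ITD), the small delay between the signals arriving at the two ears. The following is a minimal sketch of how an ITD could be estimated by cross-correlating the two ear signals. It is not the method of any particular hearing aid or of the publications listed here; the function name `estimate_itd`, the sampling rate and the synthetic noise signal are assumptions made for this illustration.

```python
import random

def estimate_itd(left, right, fs, max_lag):
    """Return the lag (in seconds) at which `right` best matches `left`.

    A positive value means the right-ear signal is delayed relative to the
    left-ear signal, i.e. the source is closer to the left ear.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Cross-correlation of the two signals at this lag.
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += left[i] * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / fs

# Synthetic test signal (assumption): broadband noise reaching the right ear
# 8 samples later than the left ear, which is 0.5 ms at 16 kHz.
rng = random.Random(0)
fs = 16000
delay = 8
left = [rng.gauss(0.0, 1.0) for _ in range(1600)]
right = [0.0] * delay + left[:-delay]

itd = estimate_itd(left, right, fs, max_lag=16)
print(f"estimated ITD: {itd * 1e3:.2f} ms")
```

With several concurrent talkers and reverberation, a single broadband correlation peak is no longer reliable, which is one reason why localization in complex scenes remains a research topic.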

The goal of our hearing-aid research is to investigate and evaluate novel algorithms that may improve acoustic communication in complex listening conditions.

Chen, Z., & Hohmann, V. (2015). Online monaural speech enhancement based on periodicity analysis and a priori SNR estimation. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 23(11), 1904–1916.

Grimm, G., Herzke, T., Berg, D., & Hohmann, V. (2006). The master hearing aid: A PC-based platform for algorithm development and evaluation. Acta Acustica United with Acustica, 92(4), 618–628.

Luts, H., Eneman, K., Wouters, J., Schulte, M., Vormann, M., Buechler, M., Dillier, N., Houben, R., Dreschler, W., Froehlich, M., Puder, H., Grimm, G., Hohmann, V., Leijon, A., Lombard, A., Mauler, D. & Spriet, A. (2010). Multicenter evaluation of signal enhancement algorithms for hearing aids. The Journal of the Acoustical Society of America, 127(3), 1491–1505. doi.org/10.1121/1.3299168

Ecological validity, user behavior and hearing device benefit

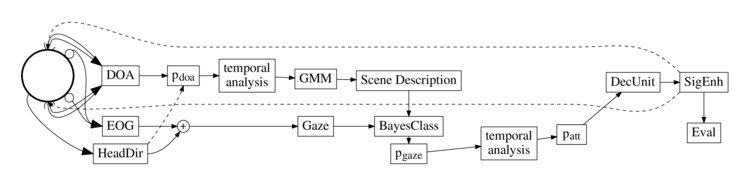

Hearing devices are typically worn on the head. Therefore, the functioning of signal enhancement algorithms and the benefit of these algorithms for the hearing device user interact with head movement. Furthermore, it is often not possible to decide, based on acoustic information alone, which of the sources in an acoustic environment are relevant to the user and which are not. Future signal enhancement algorithms could therefore analyze the user’s motion behavior to gain further insight into the user's hearing needs, enhancing only relevant sources while attenuating others.

To assess the interaction effects between motion behavior and current and future signal enhancement algorithms, evaluation methods are needed in which test participants exhibit ecologically valid motion behavior. Ecological validity in this context refers to the "degree to which research findings reflect real-life hearing-related function, activity and participation" (Keidser et al., 2020).

To enable the development of such evaluation and interaction methods, we have established a virtual reality lab, our "Gesture Lab". In this laboratory we combine high-resolution interactive spatial audio playback with state-of-the-art body sensing technologies and 300-degree field-of-view video projection. Our goal is to develop test paradigms and interaction methods in the lab that are representative of real-life situations.

Hendrikse, M. M. E., Llorach, G., Hohmann, V., & Grimm, G. (2019). Movement and Gaze Behavior in Virtual Audiovisual Listening Environments Resembling Everyday Life. Trends in Hearing, 23, 1–29. doi.org/10.1177/2331216519872362

Grimm, G., Hendrikse, M. M. E., & Hohmann, V. (2020). Review of Self-Motion in the Context of Hearing and Hearing Device Research. Ear & Hearing, 41(Supplement 1), 48S-55S. doi.org/10.1097/AUD.0000000000000940

Keidser, G., Naylor, G., Brungart, D. S., Caduff, A., Campos, J., Carlile, S., Carpenter, M. G., Grimm, G., Hohmann, V., Holube, I., Launer, S., Lunner, T., Mehra, R., Rapport, F., Slaney, M. & Smeds, K. (2020). The Quest for Ecological Validity in Hearing Science: What It Is, Why It Matters, and How to Advance It. Ear & Hearing, 41(Supplement 1), 5S-19S. doi.org/10.1097/AUD.0000000000000944

Hohmann, V., Paluch, R., Krueger, M., Meis, M., & Grimm, G. (2020). The Virtual Reality Lab: Realization and Application of Virtual Sound Environments. Ear & Hearing, 41(Supplement 1), 31S-38S. doi.org/10.1097/AUD.0000000000000945

Open tools for hearing research

To facilitate our research and to advance reproducible science, we have developed several research tools and contributed to others. The two most important tools in our lab are the Open Community Platform for Hearing Aid Algorithm Research “open Master Hearing Aid” (openMHA) and the Toolbox for Acoustic Scene Creation and Rendering (TASCAR). We have also contributed to the Auditory Modeling Toolbox (AMT).

openMHA is a software platform for hearing device signal processing. A number of state-of-the-art algorithms commonly used in hearing devices are implemented in openMHA. These include multiband dynamic compression, directional filtering, and monaural and binaural noise reduction. The software runs on Linux, macOS and Windows. A dedicated portable hardware platform, the Portable Hearing Lab (PHL), has been developed to enable experiments in the field. See www.openmha.org for further information.
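To give an idea of what multiband dynamic compression does, here is a minimal sketch of a static gain rule applied separately in three frequency bands. It does not reproduce openMHA's implementation or configuration; the function `compression_gain_db`, the band names and all parameter values are hypothetical and chosen only for illustration.

```python
def compression_gain_db(level_db, threshold_db, ratio, gain_db):
    """Static gain rule for one band.

    Below the threshold the band receives a fixed gain. Above it, the output
    level rises by only 1/ratio dB per dB of input level.
    """
    if level_db <= threshold_db:
        return gain_db
    return gain_db - (level_db - threshold_db) * (1.0 - 1.0 / ratio)

# Hypothetical band settings: (threshold in dB, compression ratio, gain in dB).
bands = {
    "low": (55.0, 1.5, 10.0),
    "mid": (50.0, 2.0, 20.0),
    "high": (45.0, 3.0, 30.0),
}

# Assumed input level of 65 dB in every band.
gains = {name: compression_gain_db(65.0, *params) for name, params in bands.items()}
for name, gain in gains.items():
    print(f"{name}: {gain:.1f} dB gain")
```

Soft sounds in a band receive the full gain while loud sounds receive less, so the wide level range of everyday sounds is mapped into the reduced range between hearing threshold and discomfort. A real compressor additionally needs a band-splitting filterbank and level estimation with attack and release time constants, which are omitted here.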

TASCAR is used to create interactive virtual acoustic and audiovisual environments for hearing science. The software focuses on low-delay interaction in virtual reality. A low-order geometric image source model is combined with a diffuse sound field rendering model, e.g. for background noise and diffuse reverberation. In addition, TASCAR offers a variety of extensions for laboratory integration, e.g. data logging, integration of motion sensors and real-time control of game engines for rendering visual components. See tascar.org/ for further information.
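The idea behind a geometric image source model can be sketched in a few lines: a reflection off a wall is treated as if it came from a mirrored copy of the source behind the wall. The example below is a first-order illustration only and is not TASCAR's implementation; the function names, the wall position, the speed of sound and the reflection coefficient are assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 °C

def mirror_across_wall(source, wall_x):
    """Image source obtained by mirroring `source` across the plane x = wall_x."""
    x, y, z = source
    return (2.0 * wall_x - x, y, z)

def propagation(source, receiver):
    """Return (delay in seconds, 1/r distance gain) for a straight path."""
    r = math.dist(source, receiver)
    return r / SPEED_OF_SOUND, 1.0 / r

source = (1.0, 0.0, 0.0)    # positions in meters (hypothetical scene)
receiver = (4.0, 4.0, 0.0)
reflection_coeff = 0.8      # assumed wall reflection coefficient

image = mirror_across_wall(source, wall_x=0.0)
direct_delay, direct_gain = propagation(source, receiver)
refl_delay, refl_gain = propagation(image, receiver)
refl_gain *= reflection_coeff

print(f"direct:    {direct_delay * 1e3:.2f} ms, gain {direct_gain:.3f}")
print(f"reflected: {refl_delay * 1e3:.2f} ms, gain {refl_gain:.3f}")
```

Because each reflection reduces to one extra delayed and attenuated copy of the source signal, a low-order model of this kind stays cheap enough to update while sources and the listener move.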

Kayser, H., Herzke, T., Maanen, P., Zimmermann, M., Grimm, G., & Hohmann, V. (2022). Open community platform for hearing aid algorithm research: open Master Hearing Aid (openMHA). SoftwareX, 17, 100953. doi.org/10.1016/j.softx.2021.100953

Grimm, G., Luberadzka, J., & Hohmann, V. (2019). A Toolbox for Rendering Virtual Acoustic Environments in the Context of Audiology. Acta Acustica United with Acustica, 105(3), 566–578. doi.org/10.3813/AAA.919337

Kayser, H., Pavlovic, C., Hohmann, V., Wong, L., Herzke, T., Prakash, S. R., Hou, Z., & Maanen, P. (2018). Open portable platform for hearing aid research. American Auditory Society's 45th Annual Scientific and Technology Conference, Scottsdale, Arizona.