External Microphone in Dynamic Scenarios

RTF-steered binaural MVDR beamforming incorporating an external microphone for dynamic acoustic scenarios

Nico Gößling, Simon Doclo

Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, May 2019

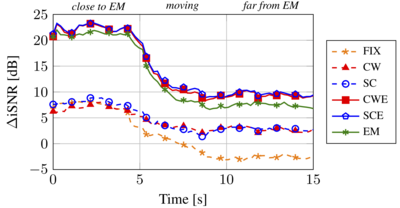

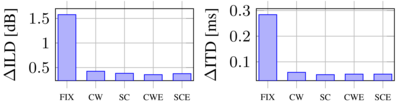

A well-known binaural noise reduction algorithm is the binaural minimum variance distortionless response (MVDR) beamformer, which can be steered using the relative transfer function (RTF) vectors of the desired source. In this paper, we consider the recently proposed spatial coherence (SC) method to estimate the RTF vectors, requiring an additional external microphone that is spatially separated from the head-mounted microphones. Although the SC method provides a biased estimate of the RTF between the head-mounted microphones and the external microphone, we show that this bias is real-valued and only depends on the SNR in the external microphone. We propose to use the SC method to estimate the extended RTF vectors that also incorporate the external microphone, which makes it possible to filter the external microphone signal in conjunction with the head-mounted microphone signals. Evaluation results using recorded signals of a moving speaker in diffuse noise show that the SC method yields slightly better performance than the widely used covariance whitening method at a much lower computational complexity.
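The two ideas in the abstract, an RTF estimate obtained with the help of the external microphone and an MVDR filter steered by an RTF vector, can be illustrated with a minimal NumPy sketch for a single frequency bin. This is not the authors' implementation. The function names `sc_rtf_estimate` and `mvdr_weights`, the synthetic covariance matrices, and all numerical values are hypothetical. The sketch also assumes that the noise in the external microphone is uncorrelated with the noise in the head-mounted microphones, and it takes the SC-style estimate to be the external-microphone column of the noisy covariance matrix, normalised by its reference-microphone entry.

```python
import numpy as np

def sc_rtf_estimate(R_y, ref, ext):
    """SC-style RTF estimate (sketch): external-microphone column of the
    noisy covariance matrix, normalised by the reference-microphone entry."""
    col = R_y[:, ext]
    return col / col[ref]

def mvdr_weights(R_n, a):
    """MVDR filter w = R_n^{-1} a / (a^H R_n^{-1} a) for steering vector a."""
    Rinv_a = np.linalg.solve(R_n, a)
    return Rinv_a / (a.conj() @ Rinv_a)

# --- Synthetic single-bin scenario (all values are illustrative) ---
rng = np.random.default_rng(0)
num_head, ref, ext = 4, 0, 4          # 4 head-mounted mics, mic 4 is external
phi_s, phi_n_ext = 2.0, 0.5           # source PSD, external-mic noise PSD

# Hypothetical acoustic transfer functions of the desired source
h = rng.standard_normal(5) + 1j * rng.standard_normal(5)
a_true = h / h[ref]                   # extended RTF vector w.r.t. the reference mic

# Noise covariance: correlated among head mics, and (ASSUMPTION) uncorrelated
# between the head-mounted microphones and the external microphone
B = rng.standard_normal((num_head, num_head)) + 1j * rng.standard_normal((num_head, num_head))
R_n = np.zeros((5, 5), dtype=complex)
R_n[:num_head, :num_head] = B @ B.conj().T + 4 * np.eye(num_head)
R_n[ext, ext] = phi_n_ext

R_y = phi_s * np.outer(h, h.conj()) + R_n   # noisy covariance matrix

a_hat = sc_rtf_estimate(R_y, ref, ext)
snr_ext = phi_s * abs(h[ext]) ** 2 / phi_n_ext
bias = a_hat[ext] / a_true[ext]       # multiplicative bias of the external-mic entry

w = mvdr_weights(R_n, a_true)         # filter over all five microphone signals
```

Under these assumptions the head-mounted entries of `a_hat` match the true RTFs, while the external-microphone entry is scaled by the real-valued factor `1 + 1/snr_ext`, which is consistent with the bias described in the abstract. A binaural beamformer would compute one such filter per reference microphone (left and right).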

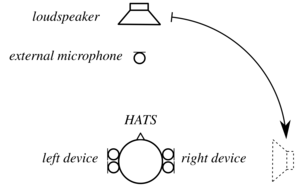

- 4 microphones of two BTE hearing devices mounted to the ears of a KEMAR dummy head

- 1 external microphone (1.5 m distance to head, 0.5 m distance to loudspeaker)

- Recorded in the Variable Acoustics Laboratory at the University of Oldenburg

- Reverberation time of about 350 ms

- Sampling rate 16 kHz

- STFT framework using a 32 ms square-root Hann window with 50% overlap

- Moving loudspeaker

- Pseudo-diffuse noise generated by four loudspeakers in the corners of the laboratory

- On-line implementation of the RTF-steered binaural MVDR beamformer using different RTF vector estimation methods (see paper for details)
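The STFT settings listed above (16 kHz sampling rate, 32 ms square-root Hann window, 50% overlap) translate into a frame length of 512 samples and a hop size of 256 samples. The following NumPy sketch shows one way such a framework could be set up. The helper names `stft` and `istft`, the use of a periodic Hann window, and the white-noise test signal are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

FS = 16_000                       # sampling rate in Hz
FRAME_LEN = FS * 32 // 1000       # 32 ms -> 512 samples
HOP = FRAME_LEN // 2              # 50% overlap -> 256 samples

# Square-root of a periodic Hann window (ASSUMPTION: periodic variant), used
# for both analysis and synthesis so that overlap-add sums to one.
n = np.arange(FRAME_LEN)
window = np.sqrt(0.5 - 0.5 * np.cos(2 * np.pi * n / FRAME_LEN))

def stft(x):
    """Windowed frames -> one-sided spectra, shape (frames, FRAME_LEN // 2 + 1)."""
    num_frames = 1 + (len(x) - FRAME_LEN) // HOP
    frames = np.stack([x[i * HOP : i * HOP + FRAME_LEN] * window
                       for i in range(num_frames)])
    return np.fft.rfft(frames, axis=1)

def istft(X):
    """Inverse transform with synthesis window and overlap-add."""
    frames = np.fft.irfft(X, n=FRAME_LEN, axis=1) * window
    out = np.zeros(HOP * (X.shape[0] - 1) + FRAME_LEN)
    for i, frame in enumerate(frames):
        out[i * HOP : i * HOP + FRAME_LEN] += frame
    return out

# Illustrative test signal: one second of white noise
rng = np.random.default_rng(0)
x = rng.standard_normal(FS)
X = stft(x)
y = istft(X)
```

Apart from the first and last half frame, where only one window contributes, `y` reproduces `x`, because the product of the analysis and synthesis windows is a Hann window that sums to one at 50% overlap. A beamformer would apply its filters to `X` per frequency bin before the inverse transform.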

Sound examples (listen via headphones)

Input signals:

- Hearing device inputs (reference microphones)

- External microphone (EM)

Output signals:

- Fixed anechoic RTFs (FIX)

- Covariance whitening method, not filtering the ext. mic (CW)

- Spatial coherence method, not filtering the ext. mic (SC)

- Covariance whitening method, filtering the ext. mic (CWE)

- Spatial coherence method, filtering the ext. mic (SCE)