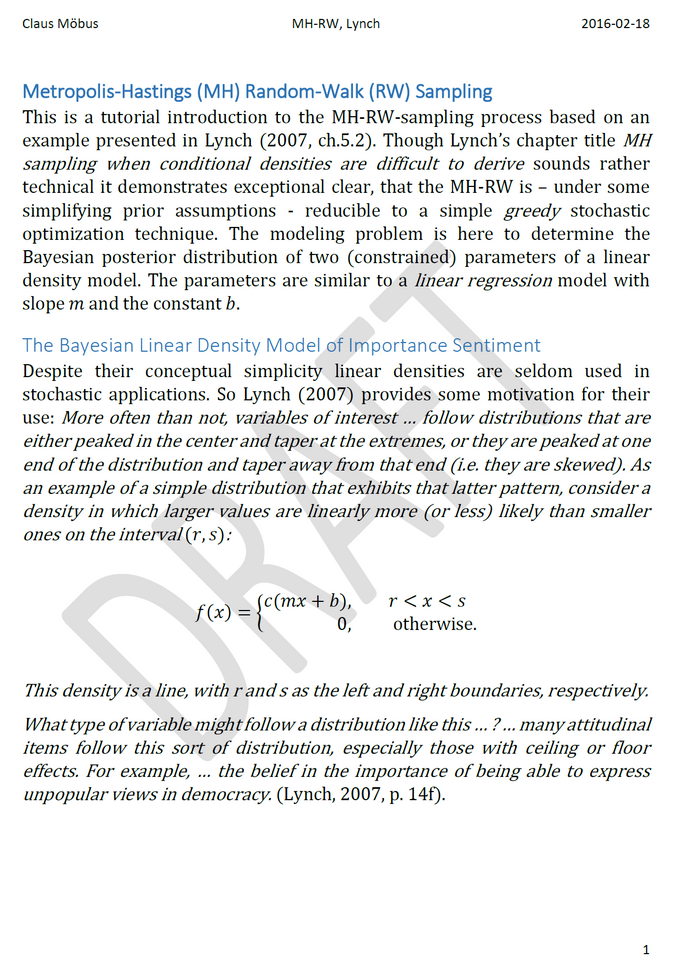

Metropolis-Hastings-Sampling

Metropolis-Hastings-Sampling

Metropolis-Hastings Random-Walk Sampling (MHRW)

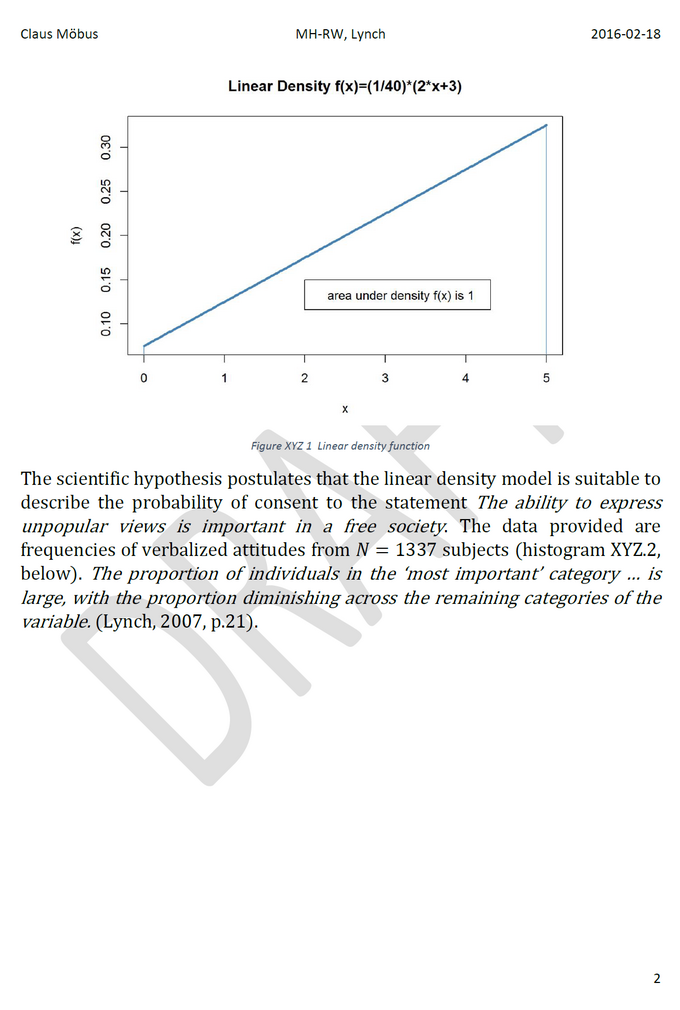

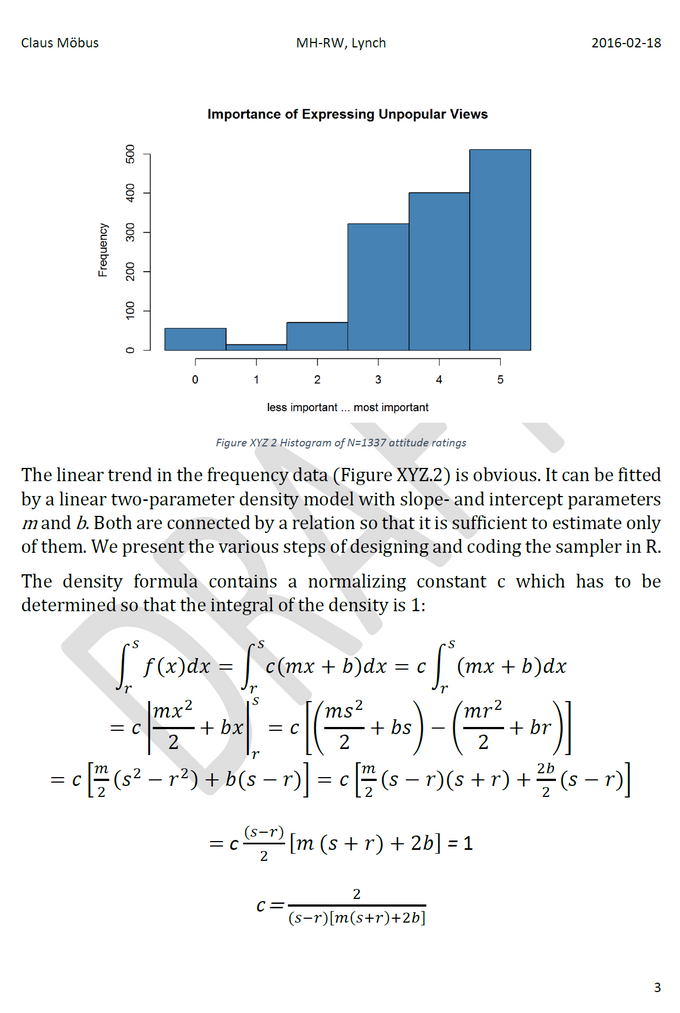

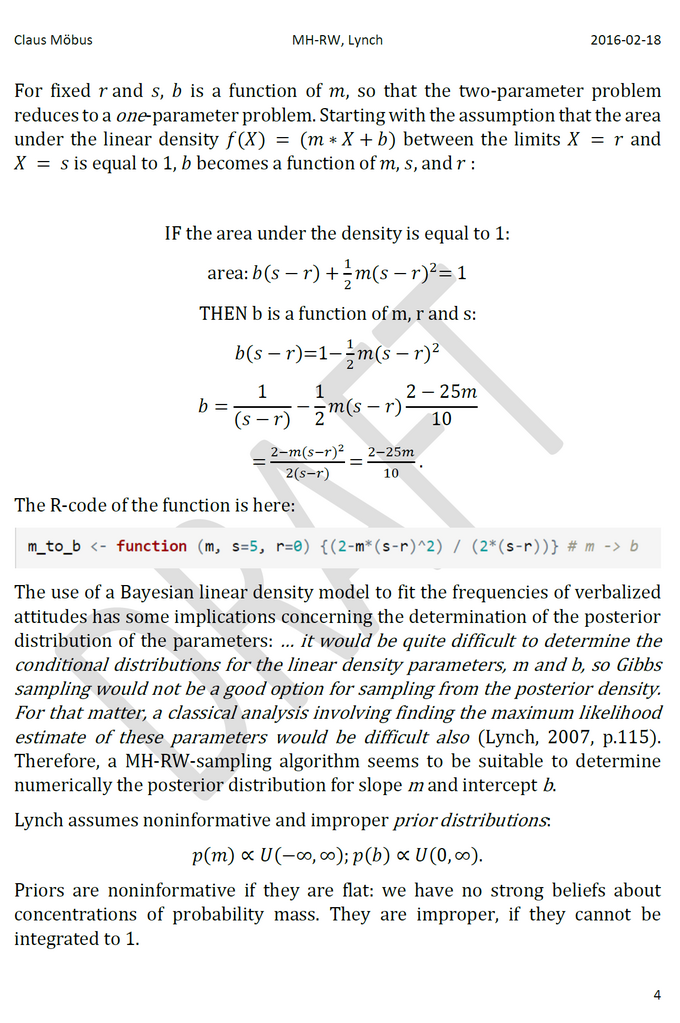

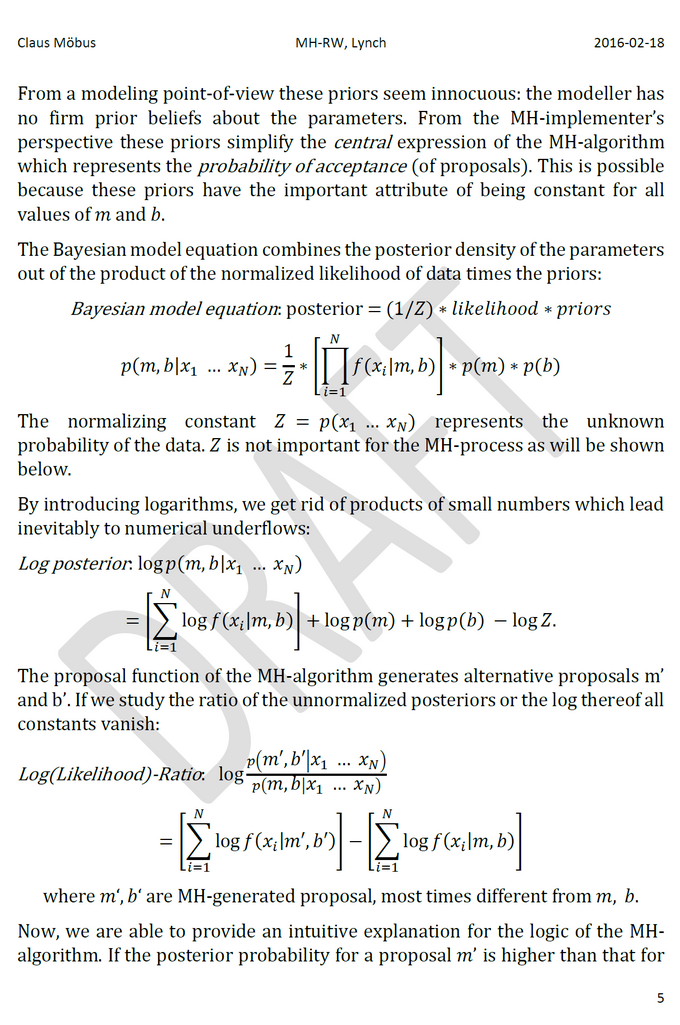

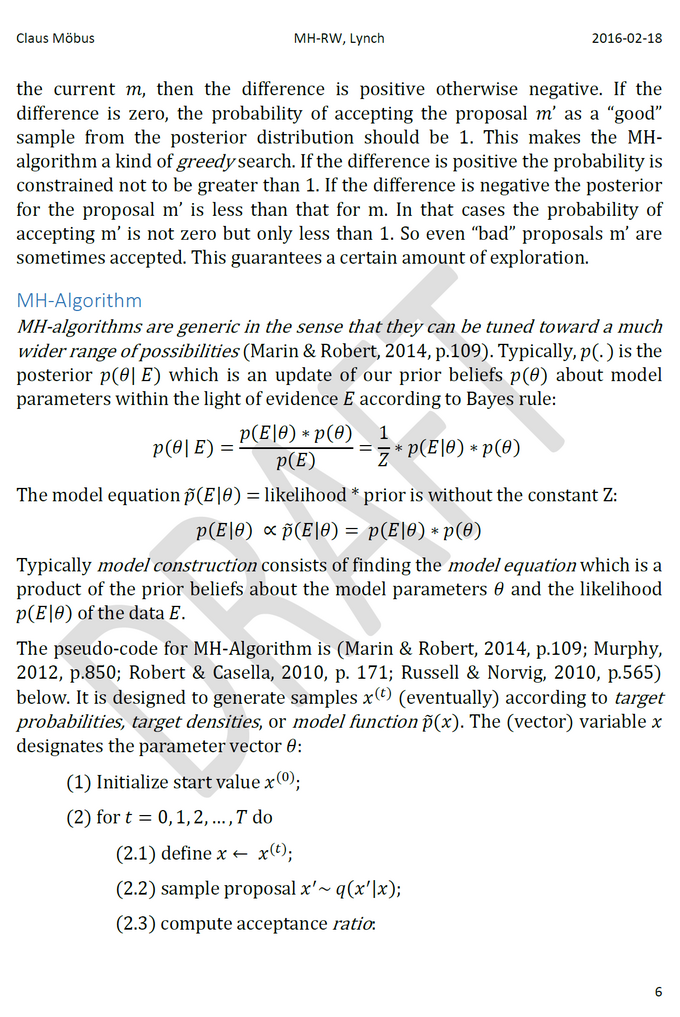

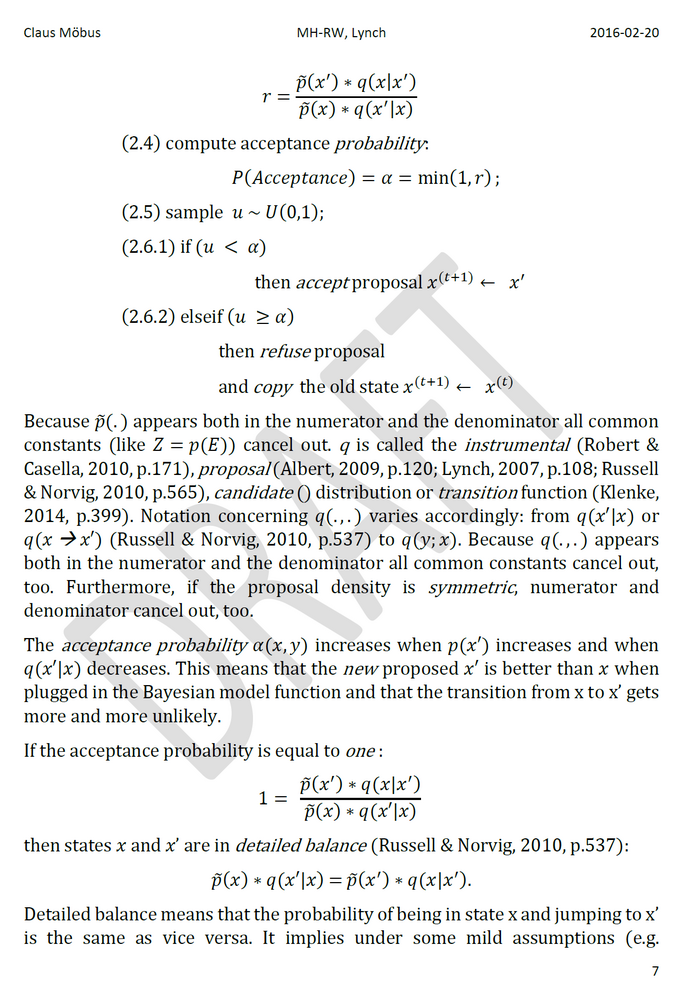

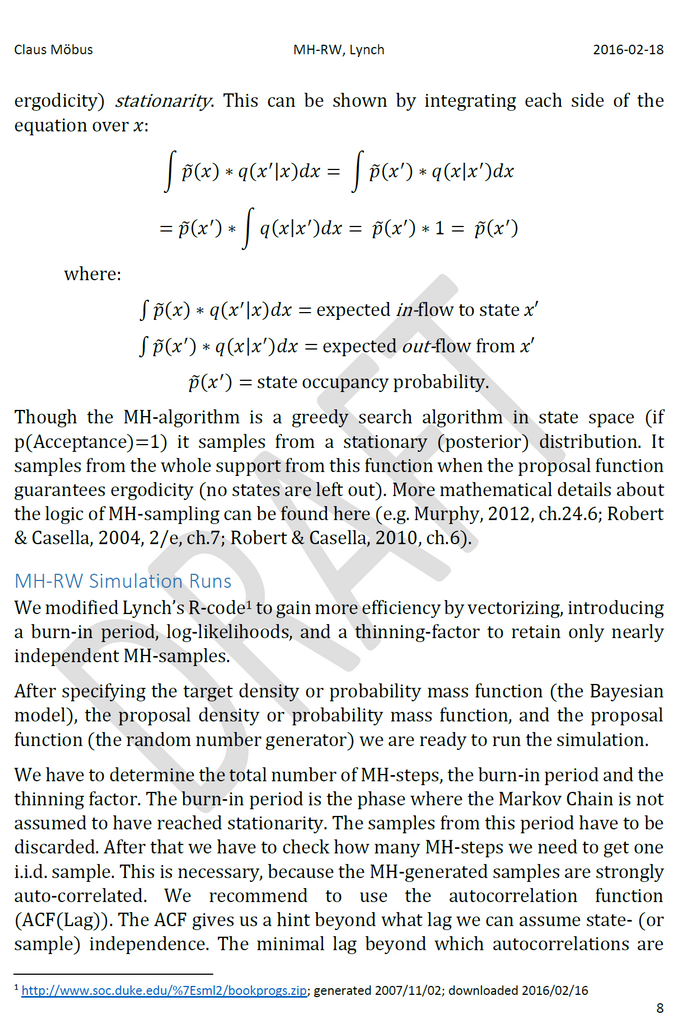

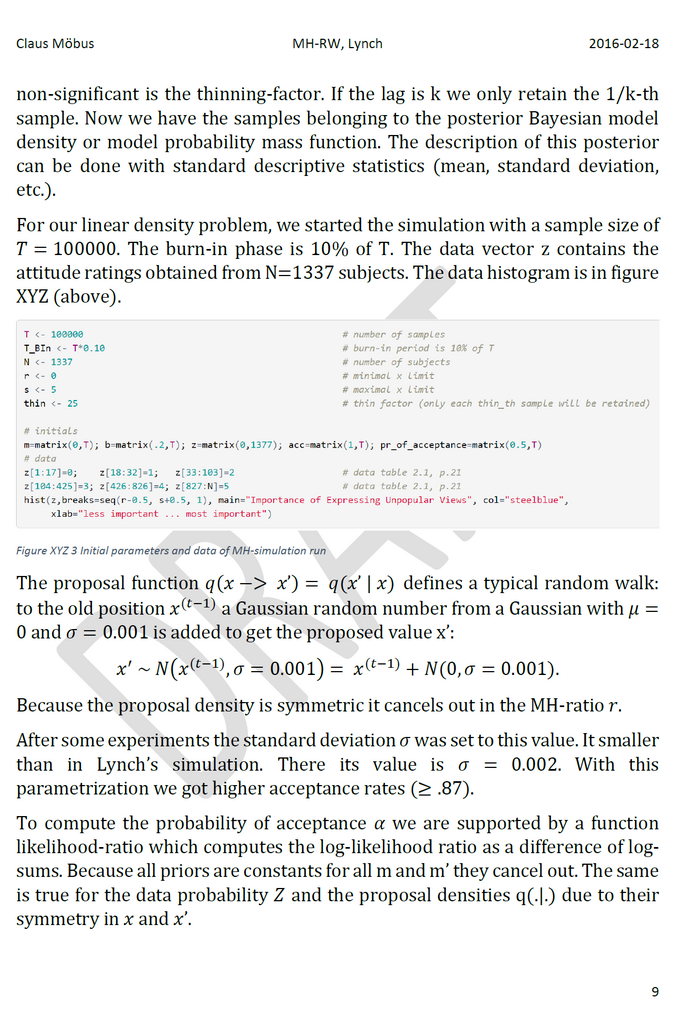

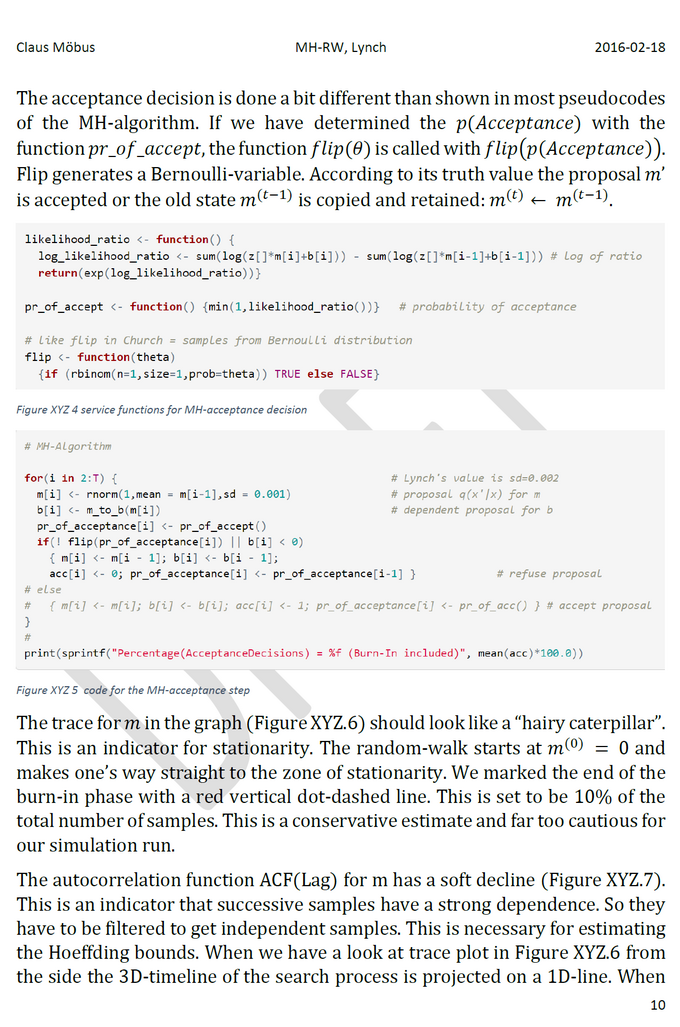

We present a tutorial introduction of the sampling process which is inspired by Lynch (2007, ch.5.2). His example demonstrates exceptional clear, that the MHRW is – under some apriori assumptions - reducible to a simple greedy stochastic optimization procedure working on a likelihood-ratio. We present the various steps of designing and coding the sampler in R. We vectorized some parts of Lynch’s code so that there is a considerable speed-up. Furthermore we added the deletion of the burn-in period and a possibility to thin the Markow chain to get rid of autocorrelations in the sampling process.

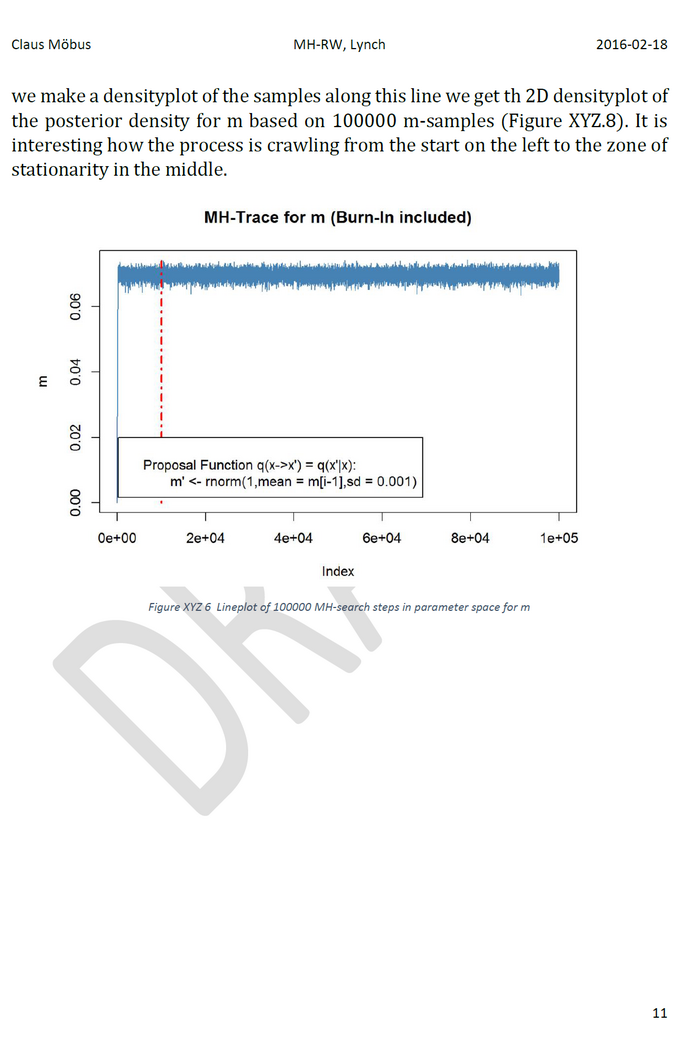

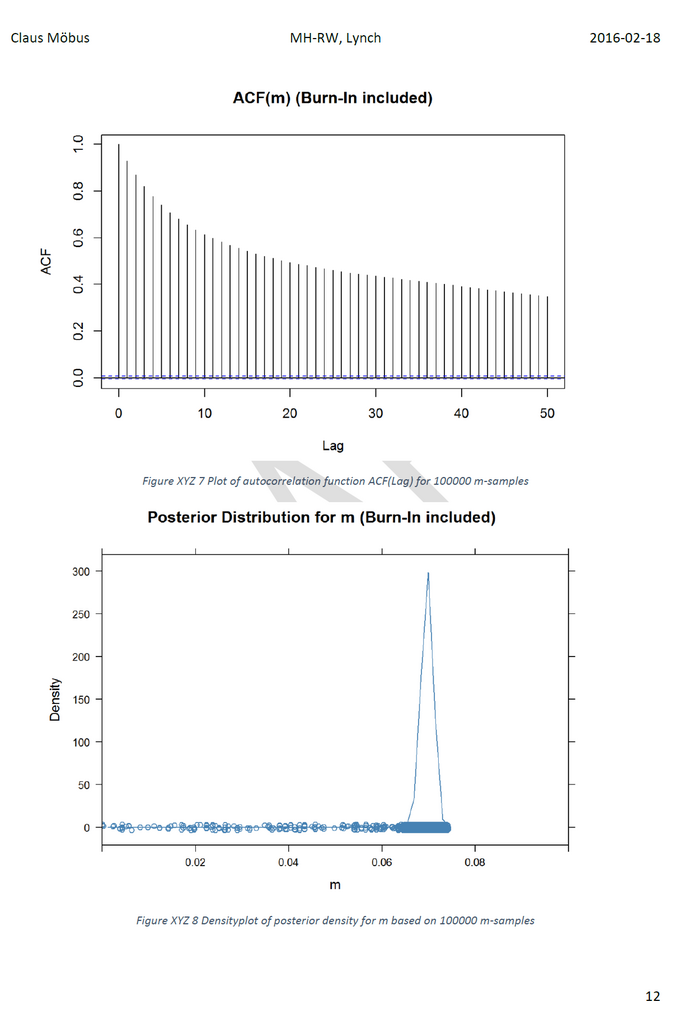

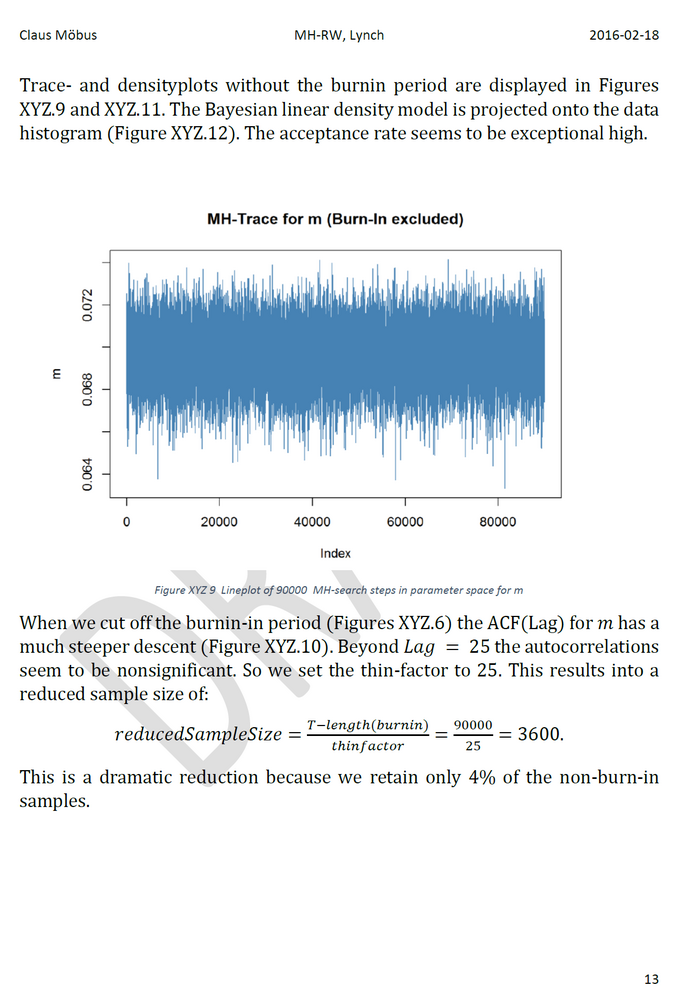

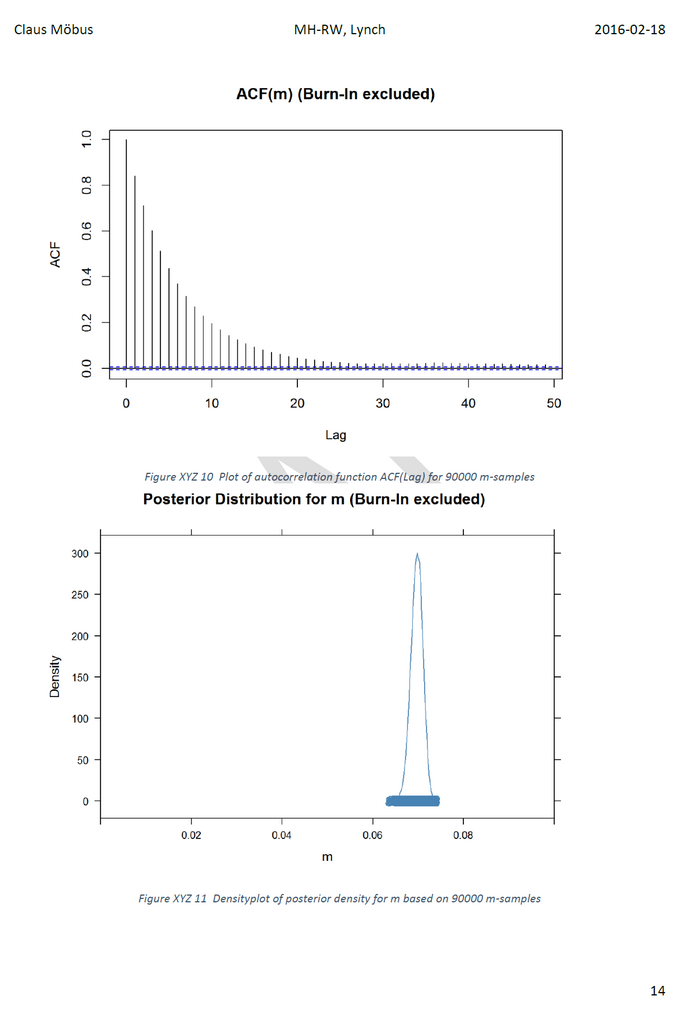

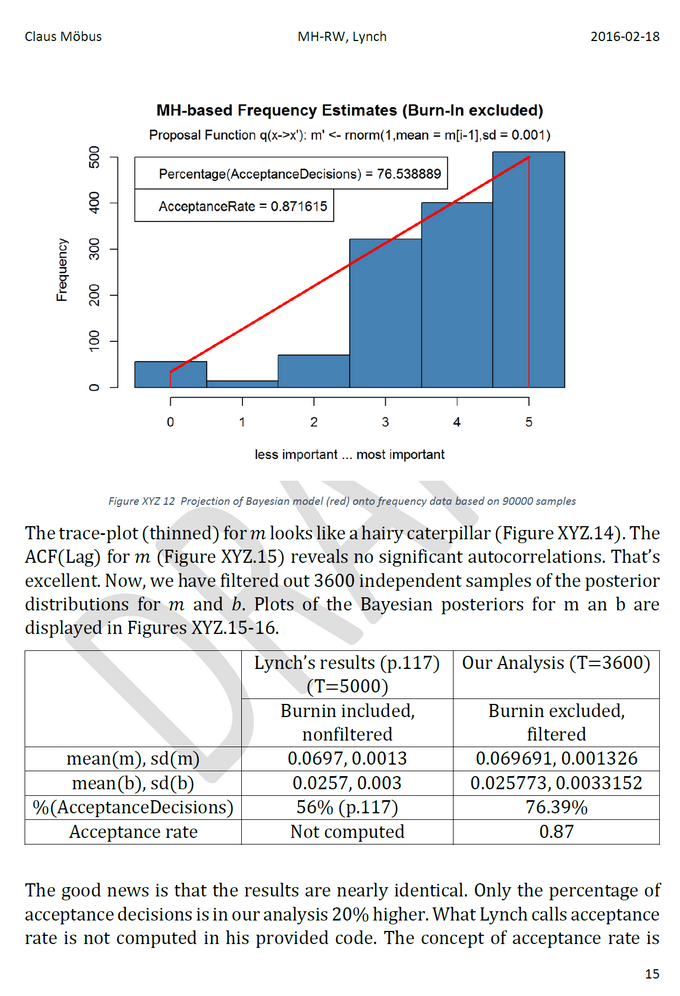

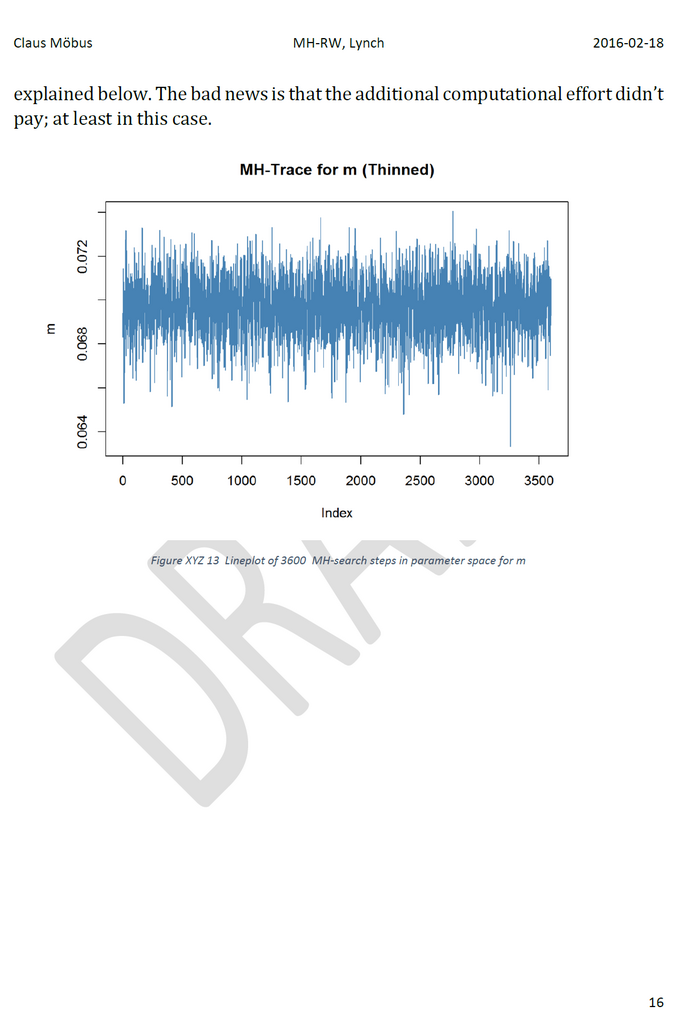

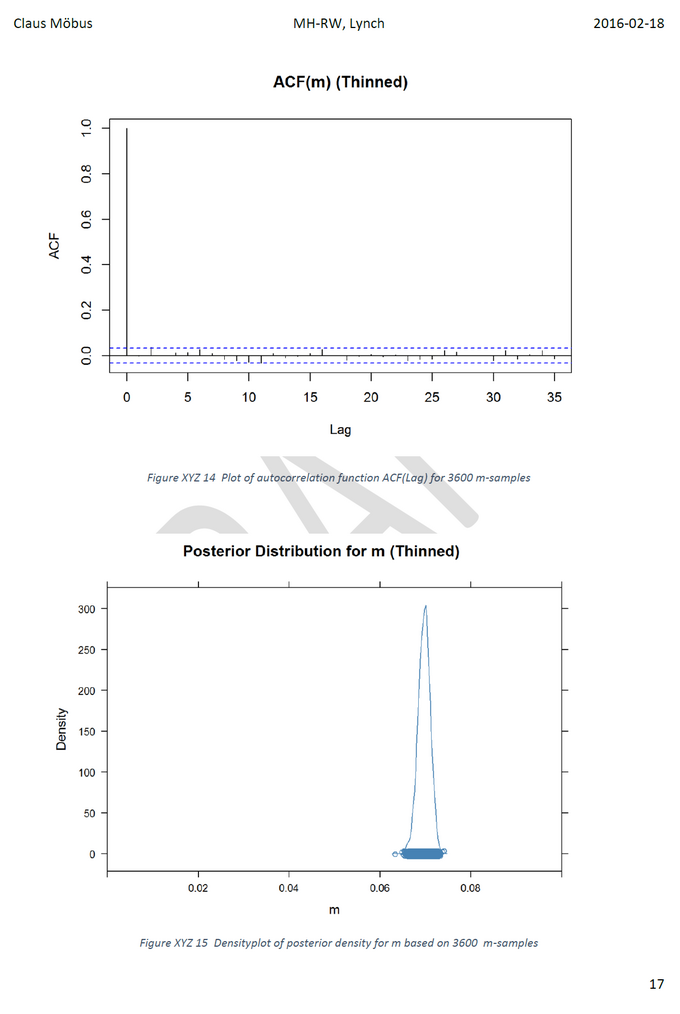

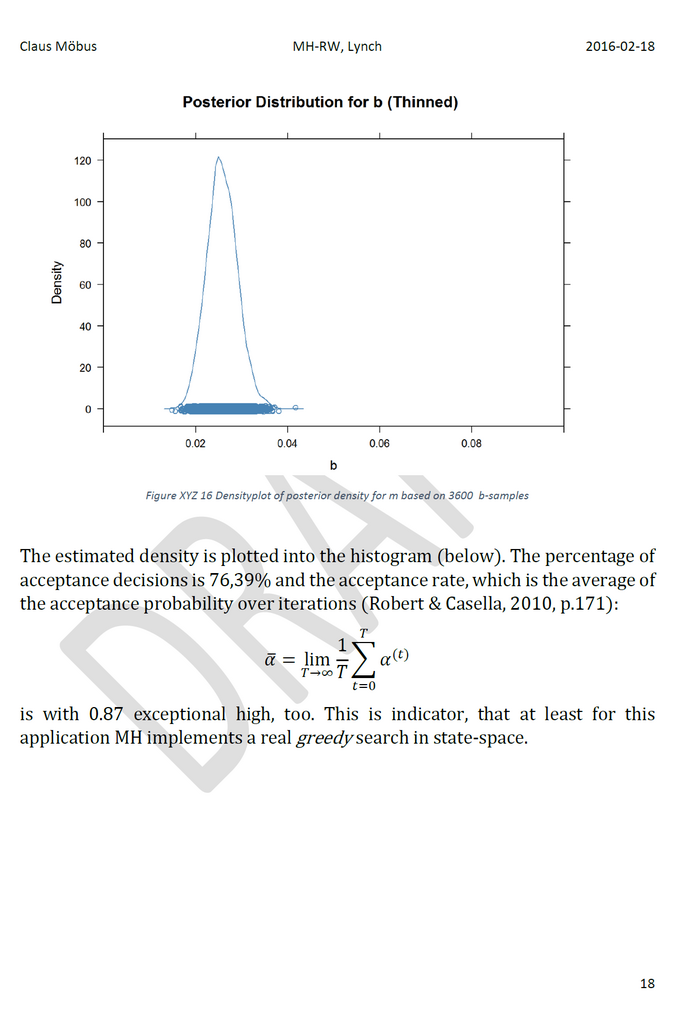

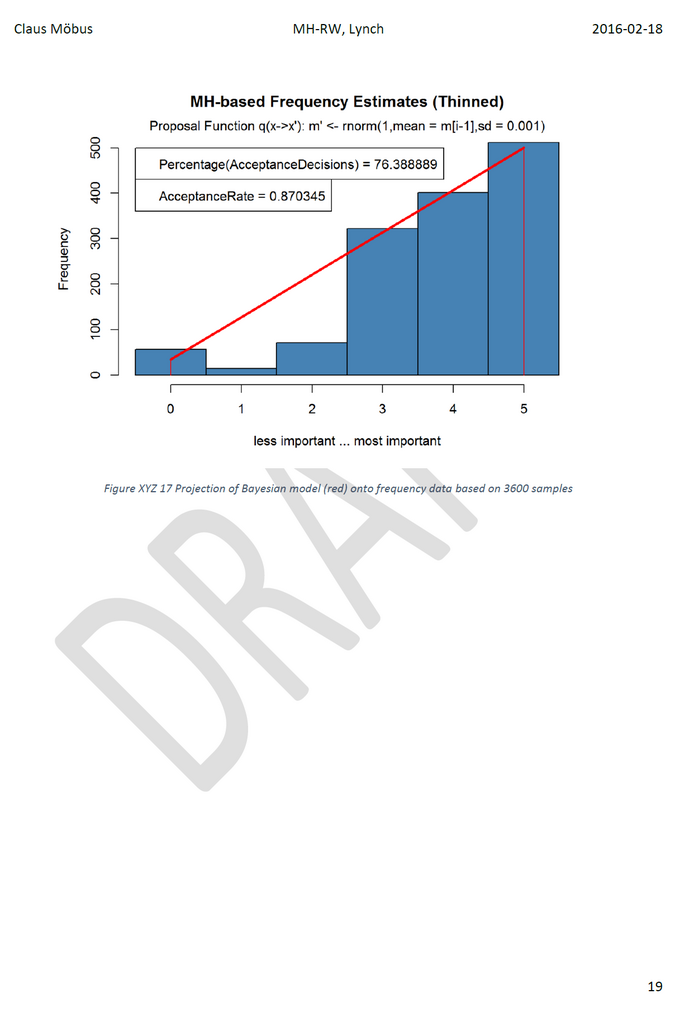

Initially the chain consisted of T=100000 sample steps. Then we recomputed the estimates after deleting a 10%-burn-in period. After that we determined the autocorrelation function ACF and the maximal lag (here 25) for the nonzero part of the ACF. This lag was used for thinning the posterior samples. The effective sample sizes shrunk to (4% of 90000) T=3600. Because the ACF did not reveal any significant autocorrelations these samples can be regarded as i.i.d. samples.

The estimates are very close to those reported by Lynch, though he used T=5000 samples without deleting the burn-in period and without thinning the time-series of MH-samples to eliminate autocorrelations. The percentage of acceptance decisions was 67.69 % and acceptance rate alpha (mean of the acceptance probabilities; Robert & Casella, 2010, p.171) was 0.87.

MacKay's Rule of Thumb: Lower Bound on Number of Iterations of a Metropolis Method

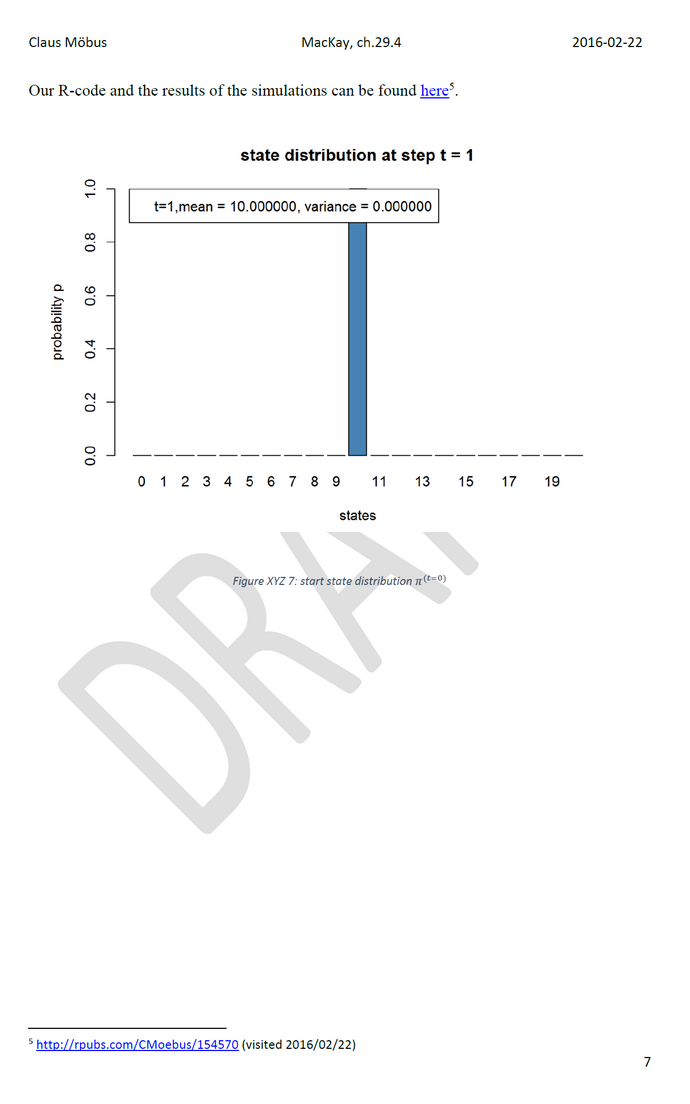

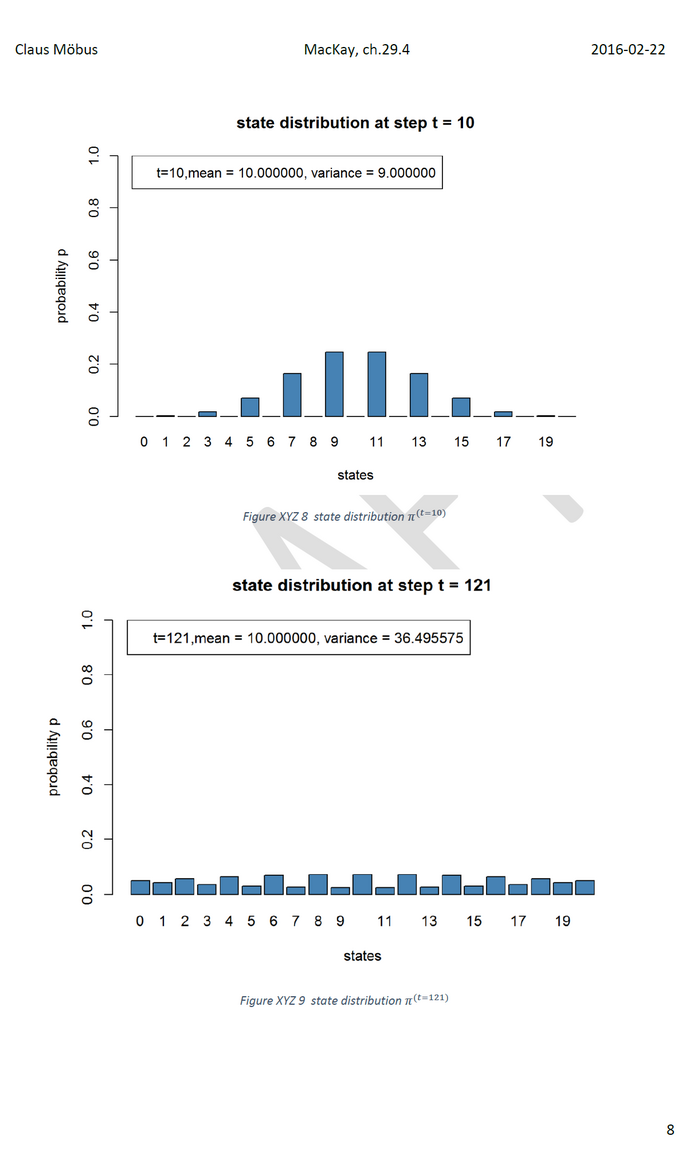

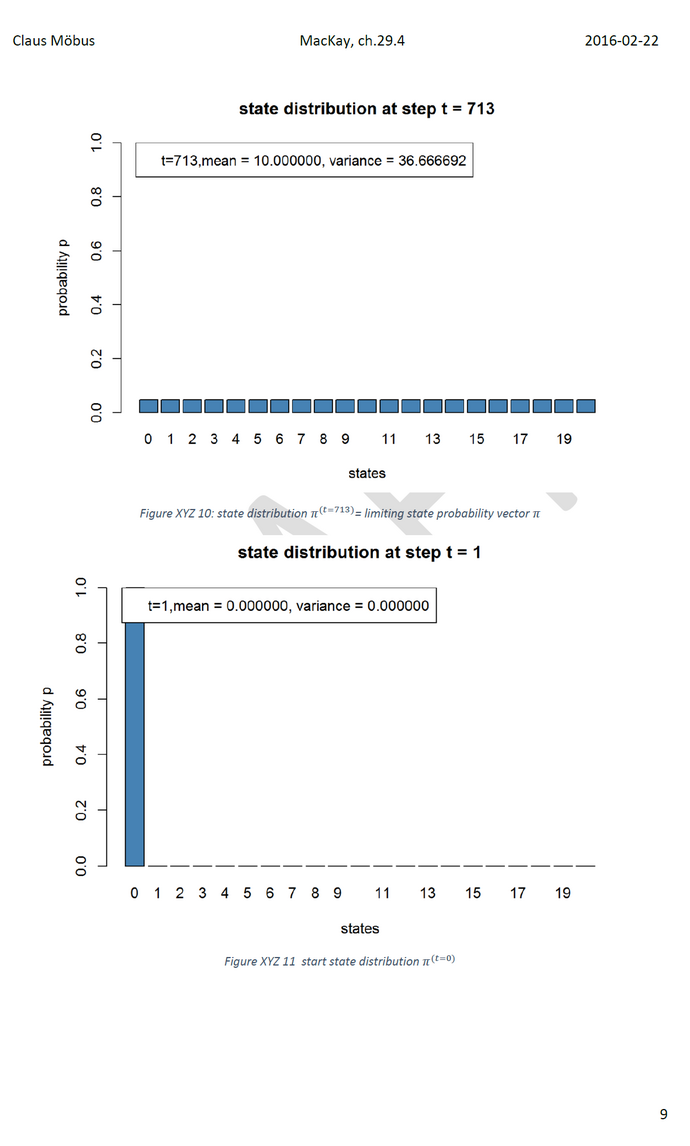

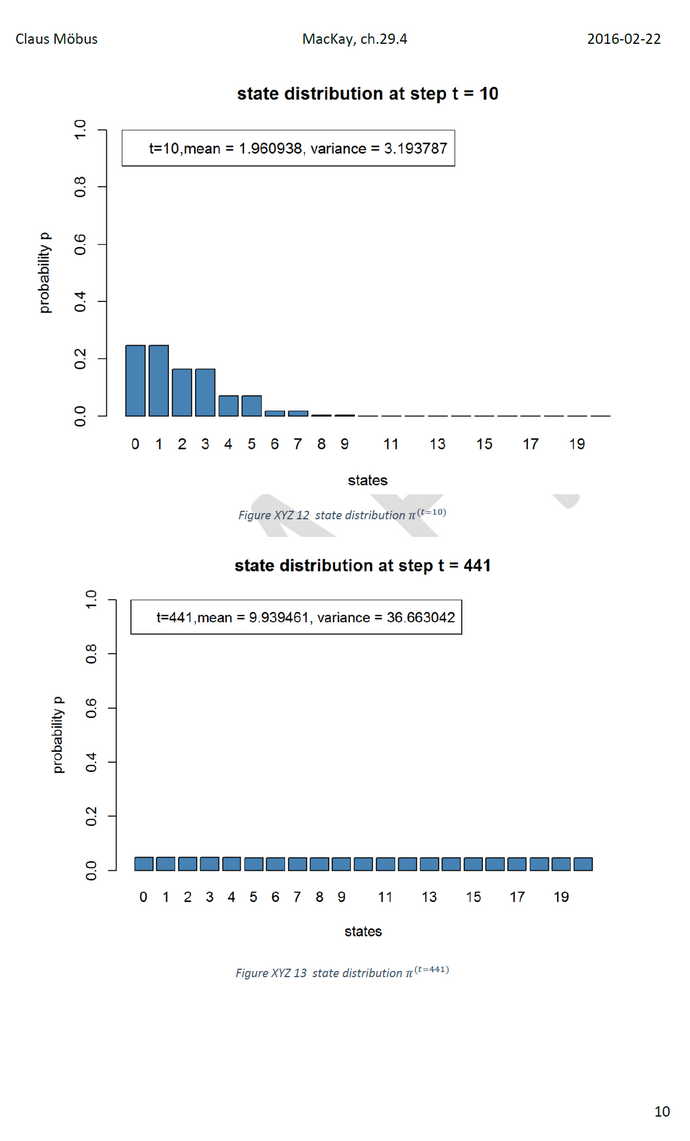

David MacKay's "Information Theory, Inference, and Learning Algorithms" (2003, p.367f) contains a chapter Demonstration of the Metropolis-Hastings (MH) method. This chapter deals with the important question how many steps should I run the MH simulation to get the next independent sample. The rule and its motivation is discussed below.