Bayesian Sumo Bots

2017

Lessons Learnt and Conclusion

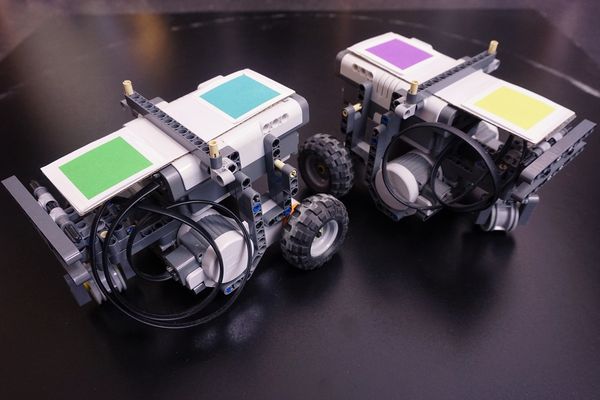

In this thesis, Bayesian Sumo models were learnt from training data obtained from games tele-controlled by three human players. The hardware of each Sumo bot is built mainly from Lego components. The native software of the bots consists of two layers: 1) native leJOS, and 2) Sumo models of the naive Bayesian classifier type.
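To make the idea of a naive Bayesian action model concrete, the following is a minimal sketch, not the thesis implementation. It assumes that each training sample pairs a tuple of discretised sensor readings with the action the human player chose at that moment. The function names, the Laplace smoothing parameter `alpha`, and the feature encoding are all hypothetical.

```python
from collections import Counter, defaultdict

# Action names as listed in the clip descriptions.
ACTIONS = ["LeftDown", "Left", "LeftUp", "Forward", "RightUp",
           "Right", "RightDown", "Backward", "Halt"]


def fit_naive_bayes(samples, n_values, alpha=1.0):
    """Estimate P(action) and P(feature_i = v | action) from demonstrations.

    samples  : list of (features, action), features being a tuple of ints
    n_values : number of discrete values each feature can take (assumed known)
    alpha    : Laplace smoothing constant (an assumption of this sketch)
    """
    action_counts = Counter(action for _, action in samples)
    feat_counts = defaultdict(Counter)  # (action, feature index) -> value counts
    for feats, action in samples:
        for i, v in enumerate(feats):
            feat_counts[(action, i)][v] += 1

    total = len(samples)
    prior = {a: (action_counts[a] + alpha) / (total + alpha * len(ACTIONS))
             for a in ACTIONS}

    def likelihood(action, i, v):
        return ((feat_counts[(action, i)][v] + alpha)
                / (action_counts[action] + alpha * n_values[i]))

    return prior, likelihood


def posterior(prior, likelihood, feats):
    """Return the normalised posterior P(action | features)."""
    scores = {}
    for a in ACTIONS:
        p = prior[a]
        for i, v in enumerate(feats):
            p *= likelihood(a, i, v)  # naive conditional independence
        scores[a] = p
    z = sum(scores.values())
    return {a: s / z for a, s in scores.items()}
```

With two hypothetical features (for example a distance bin and a bearing bin), `posterior(prior, likelihood, (0, 1))` returns one probability per action. Because the model is only a product of table lookups, evaluating it many times per second is cheap.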

System development ran into several problems (limited capacity of the NXT brick's storage and I/O ports, low quality and sensitivity of the sensors). The difficulties with the sensors in particular led to a reconstruction of the system: the sensors of the bots were replaced by virtual sensors that obtain their information from a camera in a bird's-eye position.
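As an illustration of what a camera-based virtual sensor could compute, here is a small sketch. It assumes the overhead camera already yields each bot's position and the own bot's heading in ring coordinates. The three readings (`opp_distance`, `opp_bearing`, `edge_margin`) and the circular ring parameters are hypothetical choices for this example and are not taken from the thesis.

```python
import math


def virtual_sensors(own, opp, ring_center=(0.0, 0.0), ring_radius=1.0):
    """Derive sensor-like readings from overhead-camera poses.

    own : (x, y, heading_rad) of the controlled bot
    opp : (x, y) of the opponent
    """
    ox, oy, heading = own
    dx, dy = opp[0] - ox, opp[1] - oy

    # Straight-line distance to the opponent.
    distance = math.hypot(dx, dy)

    # Direction to the opponent relative to the own heading,
    # wrapped to the interval [-pi, pi].
    bearing = math.atan2(dy, dx) - heading
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))

    # Remaining distance between the own bot and the ring edge.
    edge_margin = ring_radius - math.hypot(ox - ring_center[0],
                                           oy - ring_center[1])

    return {"opp_distance": distance,
            "opp_bearing": bearing,
            "edge_margin": edge_margin}
```

Readings like these would still need to be discretised into bins before they can serve as features of a naive Bayesian classifier with discrete probability tables.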

Because the models are simple, real-time inference was possible. However, managing the remote view of the bots' current action probability distributions became increasingly difficult as the models changed and grew in complexity.

Due to time constraints, model evaluation was hasty and informal. The human players could be ordered according to their game strength: A > B > C. The same rank order was found for the Bayesian Sumo agents: A' > B' > C'. A more detailed and formal model evaluation according to Bayesian standards was deferred to future work.

The models seem to be too simple to describe something as complex as Sumo games. For example, they do not differentiate between maneuvers such as attacking, evading, or escaping. Furthermore, because of their reactive nature, the robots lack foresight: they have no plan at hand and use only real-time sensor information.

A successful human player develops maneuvers, tactics, and strategies. This can be seen in the video data from human tele-controlled games, e.g., in attack maneuvers in which one player navigates their robot to the side at the beginning of the round and then launches a side attack. In a different situation, a specific evasion maneuver is performed, followed by a counterattack. This makes it necessary to parse the training data into behavior hierarchies and to generate hierarchical Sumo models, which is something future research can elaborate on.

Lego Sumo Bots

Clips 1-4 show fights between the human players A and B only. A tele-controls the Green/Blue bot and B the Yellow/Purple bot.

Clips 5-8 show fights between autonomous Bayesian agents A' (Green/Blue) and B' (Yellow/Purple). A' is a Bayesian clone of human A and B' of human B.

Clips 9-11 show further fights between A' and B'. In addition, the dynamic posterior probability distributions of the action variables are displayed. These are updated after each perception cycle, 20 times a second.

Clip 1: Each bot is tele-controlled by a human player: Green/Blue by A and Yellow/Purple by B

Both players attack and evade each other until eventually Yellow/Purple manages to catch Green/Blue from the side. Green/Blue is about to be pushed out, but at the last moment escapes by rapidly turning, making Yellow/Purple nearly drive itself out. Green/Blue turns around far enough to then push Yellow/Purple out backwards. The winner is human tele-controller A (Green/Blue).

Clip 2: Each bot is tele-controlled by a human player: Green/Blue by A and Yellow/Purple by B

Both players are attacking each other, without either seemingly having the upper hand. Suddenly, Green/Blue backs off while Yellow/Purple drives forward and misses. This opens an attack angle for Green/Blue who then acts quickly and pushes Yellow/Purple out of the ring. The winner is human tele-controller A (Green/Blue).

Clip 3: Each bot is tele-controlled by a human player: Green/Blue by A and Yellow/Purple by B

As the round begins, Yellow/Purple immediately makes a turn to the side to launch a side attack. Green/Blue doesn't realise this soon enough and gets caught by Yellow/Purple. Green/Blue can't escape and gets pushed out. The winner is human tele-controller B (Yellow/Purple).

Clip 4: Each bot is tele-controlled by a human player: Green/Blue by A and Yellow/Purple by B

Yellow/Purple seems to try to launch a side attack yet again, but doesn't execute it as well. This time Yellow/Purple gets caught and starts being pushed out. At the last moment, Yellow/Purple makes a turn on the spot, making Green/Blue nearly drive out of the ring. Yellow/Purple turns around completely and pushes Green/Blue out backwards. The winner is human tele-controller B (Yellow/Purple).

Clip 5: Each bot is controlled by a Bayesian agent: Green/Blue by A' and Yellow/Purple by B'

Green/Blue launches a direct attack, but misses Yellow/Purple as it drives around Green/Blue. Green/Blue tries to catch up by rotating, but rotates too far. This allows Yellow/Purple to catch the other from the side and push it out in a curve. The winner is Bayesian agent B' (Yellow/Purple).

Clip 6: Each bot is controlled by a Bayesian agent: Green/Blue by A' and Yellow/Purple by B'

The bots go back and forth before Green/Blue catches Yellow/Purple and starts pushing it out. At the last moment, Yellow/Purple rotates and escapes. Both seem to exit the ring at the same time. The winner is not clear.

Clip 7: Each bot is controlled by a Bayesian agent: Green/Blue by A' and Yellow/Purple by B'

Green/Blue manages to hook into Yellow/Purple and attacks it from the side. Yellow/Purple tries to escape by turning, but Green/Blue again manages to catch the other. Yellow/Purple again tries to escape, but Green/Blue keeps attacking until eventually pushing Yellow/Purple out. The winner is Bayesian agent A' (Green/Blue).

Clip 8: Each bot is controlled by a Bayesian agent: Green/Blue by A' and Yellow/Purple by B'

Yellow/Purple tries to circle around Green/Blue, while Green/Blue follows its rival and is able to hook into the front of it. Yellow/Purple is unable to escape and gets pushed out. Although the fight is close, the winner is Bayesian agent A' (Green/Blue).

Clip 9: Dynamic Posterior Action Distributions of Bayesian agent A' (A' controls the Green/Blue bot)

LeftDown, Left, LeftUp, Forward, RightUp, Right, RightDown, Backward, and Halt are random action variables. Their posterior probability distributions are computed after each perception cycle, 20 times a second. Left and Right represent in-place turns. Forward, Backward, and Halt represent longitudinal forward movement, backward movement, and stop, respectively. LeftUp and RightUp represent lateral turns while moving forward; LeftDown and RightDown represent lateral turns while moving backward.
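The action semantics described above can be sketched as a lookup from action name to wheel commands. This sketch assumes a two-wheel differential drive, which the source does not state explicitly, and the speed factors, the `max_speed` parameter, and the choice of always executing the most probable action are hypothetical. The thesis may select actions differently, for example by sampling from the posterior.

```python
# Hypothetical (left wheel, right wheel) speed factors for a differential drive.
WHEEL_COMMANDS = {
    "Forward":   (1.0, 1.0),    # straight ahead
    "Backward":  (-1.0, -1.0),  # straight back
    "Halt":      (0.0, 0.0),    # stop
    "Left":      (-1.0, 1.0),   # in-place turn to the left
    "Right":     (1.0, -1.0),   # in-place turn to the right
    "LeftUp":    (0.5, 1.0),    # forward while curving left
    "RightUp":   (1.0, 0.5),    # forward while curving right
    "LeftDown":  (-0.5, -1.0),  # backward while curving left
    "RightDown": (-1.0, -0.5),  # backward while curving right
}


def select_action(posterior):
    """Pick the most probable action (an assumption of this sketch)."""
    return max(posterior, key=posterior.get)


def wheel_command(action, max_speed=100):
    """Scale the speed factors of an action to concrete wheel speeds."""
    left, right = WHEEL_COMMANDS[action]
    return (left * max_speed, right * max_speed)
```

In a control loop running at 20 cycles per second, each cycle would compute the posterior from the current sensor readings, call `select_action`, and send the result of `wheel_command` to the motors.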

Clip 10: Dynamic Posterior Action Distributions of Bayesian agent A' (A' controls the Green/Blue bot)

LeftDown, Left, LeftUp, Forward, RightUp, Right, RightDown, Backward, and Halt are random action variables. Their posterior probability distributions are computed after each perception cycle, 20 times a second. Left and Right represent in-place turns. Forward, Backward, and Halt represent longitudinal forward movement, backward movement, and stop, respectively. LeftUp and RightUp represent lateral turns while moving forward; LeftDown and RightDown represent lateral turns while moving backward.

Clip 11: Dynamic Posterior Action Distributions of Bayesian agent A' (Green/Blue) with inactive Bayesian agent B' (Yellow/Purple) in Slow Motion

This slow-motion clip makes it possible to follow both the actions of the autonomous Green/Blue bot and the corresponding dynamic posterior action probability distributions. The opponent, Yellow/Purple, is inactive.

LeftDown, Left, LeftUp, Forward, RightUp, Right, RightDown, Backward, and Halt are random action variables. Their posterior probability distributions are computed after each perception cycle, 20 times a second. Left and Right represent in-place turns. Forward, Backward, and Halt represent longitudinal forward movement, backward movement, and stop, respectively. LeftUp and RightUp represent lateral turns while moving forward; LeftDown and RightDown represent lateral turns while moving backward.