HERO

Contact

- Please contact us preferably by e-mail at one of the addresses listed below, primarily at our support address.

- If you prefer to talk by phone, feel free to call us at the numbers listed below, or arrange an appointment for a call by e-mail.

- Our usual office hours are between 9:00 and 17:30. If we happen to be unavailable, you can always reach us by e-mail.

HPC Support

Address

HERO (High-End Computing Resource Oldenburg)

HERO, funded by the Deutsche Forschungsgemeinschaft (DFG) and the Ministry of Science and Culture (MWK) of the State of Lower Saxony, is a multi-purpose cluster designed to meet the needs of compute-intensive and data-driven research projects in the main areas of

- Quantum Chemistry and Quantum Dynamics,

- Theoretical Physics,

- The Neurosciences (including Hearing Research),

- Oceanic and Marine Research,

- Biodiversity, and

- Computer Science

Like its sister cluster FLOW, HERO is operated by the IT Services of the University of Oldenburg. The system is used by more than 20 research groups from the Faculty of Mathematics and Science and by a few research groups from the Department of Computing Science of the School of Computing Science, Business Administration, Economics and Law.

Overview of Hardware

- 150 Compute Nodes (1800 CPU cores, 19.1 TFlop/s theoretical peak, 4.1 TB main memory)

  - 130 "standard" nodes

    - IBM System x iDataPlex dx360 M3 server (12 cores, 24 GB DDR3 RAM, SATA-II 1 TB HDD)

    - Intel Xeon Processor X5650 ("Westmere-EP", 6 Cores, 2.66 GHz, 12 MB Cache, Max. Mem. Speed 1333 MHz, QPI 6.4 GT/s, TDP 95 W)

  - 20 "big" nodes

    - IBM System x iDataPlex dx360 M3 server (12 cores, 48 GB DDR3 RAM) with a storage expansion unit (8 SAS 300 GB HDDs, 15k RPM, 6 Gbps, configured as RAID-0 with a gross capacity of 2.4 TB)

    - CPU: same as in the "standard" nodes

    - The "big" nodes are meant for jobs that require large amounts of memory, high I/O performance (for reading and writing local scratch files), or both.

- Shared-Memory Component (120 cores, 1.3 TFlop/s theoretical peak, 640 GB main memory)

  - SGI Altix UV 100 system (20 sockets, 640 GB DDR3 RAM, SAS 300 GB system disk) with an additional storage unit (20 SAS 600 GB HDDs, 15k RPM, 6 Gbps, configured as RAID-0 with a gross capacity of 12 TB)

  - Intel Xeon Processor X7542 ("Nehalem-EX", 6 Cores, 2.66 GHz, 18 MB Cache, Max. Mem. Speed 1066 MHz, QPI 5.86 GT/s, TDP 130 W)

  - Proprietary NUMAlink 5 interconnect, which outperforms InfiniBand in both latency and bandwidth

  - The Altix UV 100 system is meant for jobs with extreme memory requirements, for very communication-intensive jobs, or for jobs that combine both. Since some of these jobs are also very I/O-intensive, the system is equipped with a high-performance RAID-0 array for fast read and write access to local scratch files.

- Some components of the older GOLEM Beowulf cluster, which HERO has succeeded, have been integrated into HERO. This adds another 57 compute nodes (AMD Opteron dual-core and quad-core CPUs, 288 cores in total, 600 GB main memory, about 1.9 TFlop/s theoretical peak).

- Management and Login Nodes

  - 2 master nodes in an active/passive high-availability (HA) configuration

    - IBM System x3550 M3 server (8 cores, 24 GB DDR3 RAM, disks: 4 SAS 300 GB HDDs, 10k RPM, 6 Gbps, configured as RAID-10)

    - Intel Xeon Processor E5620 ("Westmere-EP", 4 Cores, 2.4 GHz, 12 MB Cache, Max. Mem. Speed 1066 MHz, QPI 5.86 GT/s, TDP 80 W)

    - The master nodes are shared between HERO and its sister cluster, FLOW, and run all vital cluster services (node provisioning, DHCP, DNS, LDAP, NFS, Job Management System, etc.). They also provide monitoring for both clusters (with automated alerting). Monitoring covers hardware components (health states of all servers, temperature, power consumption, etc.) as well as basic cluster services (with automatic restart if a service fails).

  - 2 login nodes for user access to the system, software development (programming environment), and job submission and control

    - IBM System x3550 M3 server (8 cores, 24 GB DDR3 RAM, disks: 2 SAS 146 GB HDDs, 10k RPM, 6 Gbps, configured as RAID-1)

    - CPU: same as in the master nodes

- Internal networks

  - Node interconnect: Gigabit Ethernet ("MPI network") with a 10Gb Ethernet backbone network (non-blocking islands of 96 nodes each)

  - A second, physically separate Gigabit Ethernet network ("base network") for vital cluster services (node provisioning, DHCP, DNS, LDAP, NFS, Job Management System, etc.)

  - 10Gb Ethernet backbone network connecting the management and login nodes, the storage system, and the Gigabit Ethernet (MPI and base network) leaf switches

  - Dedicated IPMI network for hardware monitoring and control, including access to the VGA console (KVM functionality), allowing full remote management of the cluster

- Storage System

  - Enterprise-class scalable NAS cluster (manufacturer: EMC Isilon), 180 TB raw capacity, 130 TB net capacity (at the chosen redundancy level), IOPS (SPECsfs2008): 18,075 (NFS) / 32,279 (CIFS), InfiniBand backend network, two Gigabit Ethernet and two 10Gb Ethernet frontend ports per storage node

  - The storage system is shared between HERO and its sister cluster, FLOW. Disk space is allocated to the two clusters according to how much of the storage hardware was paid for from the FLOW and HERO project funds, respectively.
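The theoretical peak, memory, and scratch-capacity figures in the hardware overview follow from simple per-node arithmetic. The following sketch reproduces them. It assumes (this is not stated in the text) that every Westmere-EP (X5650) and Nehalem-EX (X7542) core can retire 4 double-precision floating-point operations per clock cycle, i.e. one 2-wide SSE add plus one 2-wide SSE multiply, and it uses decimal units (1 TB = 1000 GB). The names `peak_tflops` and `FLOPS_PER_CYCLE` are illustrative only.

```python
"""Back-of-the-envelope check of the HERO hardware figures.

Assumption (not stated in the source text): each X5650 / X7542 core
retires 4 double-precision flops per cycle (2-wide SSE add + 2-wide SSE mul).
"""

FLOPS_PER_CYCLE = 4  # assumed DP flops per core per clock cycle


def peak_tflops(cores: int, clock_ghz: float,
                flops_per_cycle: int = FLOPS_PER_CYCLE) -> float:
    """Theoretical peak in TFlop/s = cores * clock (GHz) * flops/cycle / 1000."""
    return cores * clock_ghz * flops_per_cycle / 1000.0


# Compute nodes: 130 "standard" (24 GB) + 20 "big" (48 GB) nodes,
# each with 12 cores (two 6-core X5650 at 2.66 GHz).
STANDARD_NODES, BIG_NODES, CORES_PER_NODE = 130, 20, 12
compute_cores = (STANDARD_NODES + BIG_NODES) * CORES_PER_NODE
compute_peak = peak_tflops(compute_cores, 2.66)
compute_mem_gb = STANDARD_NODES * 24 + BIG_NODES * 48

# Shared-memory component: 20 sockets, each a 6-core X7542 at 2.66 GHz.
uv_cores = 20 * 6
uv_peak = peak_tflops(uv_cores, 2.66)

# Gross RAID-0 capacity is simply disks * disk size (no parity, no mirroring).
big_node_scratch_tb = 8 * 300 / 1000
uv_scratch_tb = 20 * 600 / 1000

if __name__ == "__main__":
    print(f"Compute nodes: {compute_cores} cores, "
          f"{compute_peak:.2f} TFlop/s, {compute_mem_gb / 1000:.2f} TB RAM")
    print(f"Altix UV 100: {uv_cores} cores, {uv_peak:.2f} TFlop/s")
    print(f"RAID-0 scratch: {big_node_scratch_tb:.1f} TB per big node, "
          f"{uv_scratch_tb:.1f} TB on the UV 100")
```

Under these assumptions the script reports about 19.15 TFlop/s and 4.08 TB for the compute nodes and about 1.28 TFlop/s for the Altix UV 100, in line with the quoted figures (the 19.1 TFlop/s in the text appears to be the value truncated rather than rounded).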

System Software and Middleware

- All cluster nodes run Scientific Linux

- Cluster management: Bright Cluster Manager

- Commercial compilers, debuggers and profilers, and performance libraries: Intel Cluster Studio, PGI

- Workload management: Sun Grid Engine (SGE)

Selected applications running on HERO

(Due to licensing restrictions, some applications are only accessible to specific users or research groups.)

- Quantum Chemistry Packages: Gaussian 09, MOLCAS, MOLPRO, VASP

- LEDA - a C++ class library for efficient data types and algorithms

- MATLAB, including the Parallel Computing Toolbox

- FVCOM - The Unstructured Grid Finite Volume Coastal Ocean Model

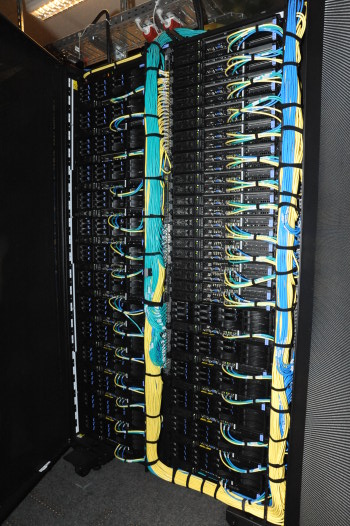

Pictures