
Project B1 - The immersive hearing aid

With current hearing aid technology, the largest speech communication benefit in adverse listening conditions is typically achieved through directional filtering. To obtain this benefit, however, hearing aid users have to change their natural movement behavior, for example by keeping their head pointed toward the target talker, and they lose the sense of being immersed in the sound environment.

The goal of the project is therefore to develop an immersive hearing aid that offers the large benefit of directional filters without interfering with the user's behavior or sense of immersion, and that takes the user's listening intention into account in the signal processing.

To achieve this, methods from auditory scene analysis are combined with an automatic estimation of the listening intention.

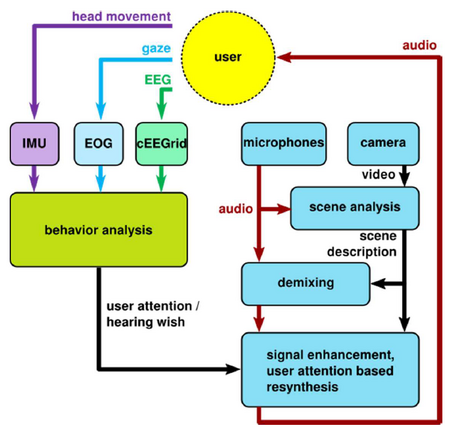

Figure 1: Schematic flow diagram of the immersive hearing aid. The core of the concept is a combination of acoustic and image analysis (scene analysis block) with an analysis of sensor signals (behavior analysis block). Together they detect the listening intention, i.e., the source the user is attending to, and allow that attended source to be enhanced optimally (signal enhancement block).

Figure 2: A typical evaluation environment
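The processing chain of Figure 1 can be illustrated with a deliberately simplified Python sketch. It is not the project's method: the attended-source heuristic (choose the source closest to the head direction), the two-microphone geometry (one microphone per ear, 16 cm apart) and the far-field delay-and-sum beamformer standing in for the signal enhancement block are all assumptions made for illustration. The list of source azimuths stands in for the output of the scene analysis block, and the head yaw stands in for the sensor signals of the behavior analysis block.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air at room temperature


def angular_distance_deg(a: float, b: float) -> float:
    """Smallest absolute difference between two azimuths in degrees (0..180)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def estimate_attended_source(source_azimuths_deg: list[float], head_yaw_deg: float) -> int:
    """Hypothetical behavior-analysis heuristic: the listener is assumed to
    attend to the source closest to the current head direction."""
    return min(
        range(len(source_azimuths_deg)),
        key=lambda i: angular_distance_deg(source_azimuths_deg[i], head_yaw_deg),
    )


def delay_and_sum_gain(look_deg, source_deg, mic_x_m, freq_hz, c=SPEED_OF_SOUND_M_S):
    """Magnitude response of a far-field delay-and-sum beamformer steered to
    look_deg, for a plane wave arriving from source_deg at a single frequency.

    Microphones lie on the x axis (interaural axis); azimuth 0 is straight ahead.
    Steering delays cancel the inter-microphone delays of the look direction,
    so only the residual phase from (sin(look) - sin(source)) remains."""
    diff = math.sin(math.radians(look_deg)) - math.sin(math.radians(source_deg))
    total = sum(cmath.exp(-2j * math.pi * freq_hz * x * diff / c) for x in mic_x_m)
    return abs(total) / len(mic_x_m)


if __name__ == "__main__":
    mics_m = [-0.08, 0.08]            # hypothetical: one microphone per ear
    sources_deg = [-60.0, 0.0, 45.0]  # stand-in for scene-analysis output
    target = estimate_attended_source(sources_deg, head_yaw_deg=30.0)
    print("attended source index:", target)  # prints: attended source index: 2
    for az in sources_deg:
        gain = delay_and_sum_gain(sources_deg[target], az, mics_m, freq_hz=1000.0)
        print(f"source at {az:+.0f} deg: gain {gain:.3f}")
```

A delay-and-sum beamformer is used here only because it is the simplest directional filter. The attended source passes with unit gain, while the other sources are attenuated at the chosen frequency; with only two microphones on the interaural axis, the sketch cannot distinguish front from back.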

Publications

2025

- Hohmann V, Kollmeier B, Grimm G (2025) Hörstörungen und Hörgeräte. In: Weinzierl S (ed) Handbuch der Audiotechnik. Springer Vieweg, Berlin, Heidelberg. Hardcover ISBN 978-3-662-60368-0. 20 pages

https://link.springer.com/book/9783662603680

Preprint book chapter from 2021 available at DOI: 10.1007/978-3-662-60357-4_7-1

Preprint book from 2021 at DOI: 10.1007/978-3-662-60357-4

- Roßbach J, Westhausen NL, Kayser H, Meyer BT (2025) Non-intrusive binaural speech recognition prediction for hearing aid processing. Speech Communication 170: 103202. DOI: 10.1016/j.specom.2025.103202

2024

- Gerken M, Hohmann V, Grimm G (2024) Comparison of 2D and 3D multichannel audio rendering methods for hearing research applications using technical and perceptual measures. Acta Acustica 8: 17, 1-17. DOI: 10.1051/aacus/2024009

- Grimm G (2024) Interactive low delay music and speech communication via network connections (OVBOX). Acta Acustica 8: 18, 1–7. DOI: 10.1051/aacus/2024011

- Grimm G, Daeglau M, Hohmann V, Debener S (2024) EEG hyperscanning in the Internet of Sounds: low-delay real-time multi-modal transmission using the OVBOX. 2024 IEEE 5th International Symposium on the Internet of Sounds (IS2), Erlangen, Germany, pp. 1-8. DOI: 10.1109/IS262782.2024.10704205

- Le Rhun L, Llorach G, Delmas T, Suied C, Arnal LH, Lazard DS (2024) A standardised test to evaluate audio-visual speech intelligibility in French. Heliyon 10, e24750 (12 pages). DOI: 10.1016/j.heliyon.2024.e24750

- Llorach G, Oetting D, Vormann M, Meis M, Hohmann V (2024) Vehicle noise: comparison of loudness ratings in the field and the laboratory. International Journal of Audiology 63(2), 117-126. (Published online: 13 Dec 2022). DOI: 10.1080/14992027.2022.2147867

2023

- Grimm G, Kayser H, Kothe A, Hohmann V (2023) Evaluation of behavior-controlled hearing devices in the lab using interactive turn-taking conversations. 10th Convention of the European Acoustics Association (Forum Acusticum), Turin, Italy, 11.-15.09.2023, pp. 2773-2777. DOI: 10.61782/fa.2023.0127

- Grimm G, Kothe A, Hohmann V (2023) Effect of head motion animation on immersion and conversational benefit in turn-taking conversations via telepresence in audiovisual virtual environments. 10th Convention of the European Acoustics Association (Forum Acusticum), Turin, Italy, 11.-15.09.2023, pp. 433-435. DOI: 10.61782/fa.2023.0126

- Hohmann V (2023) The future of hearing aid technology. Z Gerontol Geriat 56: 283–289.

DOI: 10.1007/s00391-023-02179-y

- Picinali L, Grimm G, Hioka Y, Kearney G, Johnston D, Jin C, Simon LSR, Wuthrich H, Mihocic M, Majdak P, Vickers D (2023) VR/AR and hearing research: current examples and future challenges. 10th Convention of the European Acoustics Association (Forum Acusticum), 11.-15.09.2023, Turin, Italy, pp 1393-1400. DOI: 10.61782/fa.2023.0322

2022

- Heeren J, Nuesse T, Latzel M, Holube I, Hohmann V, Wagener KC, Schulte M (2022) The concurrent OLSA test: a method for speech recognition in multi-talker situations at fixed SNR. Trends Hear 26: 23312165221108257, 12 pages. DOI: 10.1177/23312165221108257

- Hendrikse MME, Eichler T, Hohmann V, Grimm G (2022) Self-motion with hearing impairment and (directional) hearing aids. Trends Hear 26: 23312165221078707, 15 pages. DOI: 10.1177/23312165221078707

- Llorach G, Kirschner F, Grimm G, Zokoll MA, Wagener KC, Hohmann V (2022) Development and evaluation of video recordings for the OLSA matrix sentence test. Int. J. Audiol. 61(4): 311-321. DOI: 10.1080/14992027.2021.1930205

Video recordings for the female German Matrix Sentence Test (OLSA) can be found under DOI: 10.5281/zenodo.3673062.

- Sönnichsen R, Llorach To G, Hochmuth S, Hohmann V, Radeloff A (2022) How face masks interfere with speech understanding of normal-hearing individuals: vision makes the difference. Otol Neurotol 43: 282-288. DOI: 10.1097/MAO.0000000000003458

- Sönnichsen R, Llorach To G, Hohmann V, Hochmuth S, Radeloff A (2022) Challenging times for cochlear implant users – effect of face masks on audiovisual speech understanding during the COVID-19 pandemic. Trends in Hearing 26, 9 pages. DOI: 10.1177/233121652211343788

- van de Par S, Ewert SD, Hladek L, Kirsch C, Schütze J, Llorca-Bofí J, Grimm G, Hendrikse MME, Kollmeier B, Seeber BU (2022) Auditory-visual scenes for hearing research. Acta Acustica 6:55, 14 pages. DOI: 10.1051/aacus/2022032

2021

- Hartwig M, Hohmann V, Grimm G (2021) Speaking with avatars - influence of social interaction on movement behavior in interactive hearing experiments. IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pp 94-98. DOI: 10.1109/VRW52623.2021.00025

2020

- Grimm G, Hendrikse MME, Hohmann V (2020) Review of self-motion in the context of hearing and hearing device research. Ear Hear 41: 48S-55S. DOI: 10.1097/AUD.0000000000000940

- Hendrikse MME, Grimm G, Hohmann V (2020) Evaluation of the influence of head movement on hearing aid algorithm performance using acoustic simulations. Trends Hear 24: 2331216520916682, 1-20. DOI: 10.1177/2331216520916682. Software is available at DOI: 10.5281/zenodo.3905920

- Hohmann V, Paluch R, Krueger M, Meis M, Grimm G (2020) The virtual reality lab: realization and application of virtual sound environments. Ear Hear 41 (Suppl 1): 31S–38S. DOI: 10.1097/AUD.0000000000000945

- Keidser G, Naylor G, Brungart DS, Caduff A, Campos J, Carlile S, Carpenter MG, Grimm G, Hohmann V, Holube I, Launer S, Lunner T, Mehra R, Rapport F, Slaney M, Smeds K (2020) The Quest for Ecological Validity in Hearing Science: What It Is, Why It Matters, and How to Advance It. Ear Hear 41 (Suppl 1): 5S-19S. DOI: 10.1097/AUD.0000000000000944

2019

- Grimm G, Luberadzka J, Hohmann V (2019) A toolbox for rendering virtual acoustic environments in the context of audiology. Acta Acust United Acust 105: 566–578. DOI: 10.3813/AAA.919337

- Hendrikse MME, Llorach G, Hohmann V, Grimm G (2019) Movement and gaze behavior in virtual audiovisual listening environments resembling everyday life. Trends Hear 23: 2331216519872362, 1-29. DOI: 10.1177/2331216519872362.

The database of movement behavior and EEG can be found under DOI: 10.5281/zenodo.1434090, the virtual environments are published under DOI: 10.5281/zenodo.3724085.

- Llorach G, Vormann M, Hohmann V, Oetting D, Fitschen C, Meis M, Krueger M, Schulte M (2019) Vehicle noise: loudness ratings, loudness models, and future experiments with audiovisual immersive simulations. In INTER-NOISE and NOISE-CON Congress and Conference Proceedings 259(3), 6752-6759. DOI: 10.5281/zenodo.4276091. Data set available at DOI: 10.5281/zenodo.6519277. Videos available at DOI: 10.5281/zenodo.3822311

- Paluch R, Krueger M, Hendrikse MME, Grimm G, Hohmann V, Meis M (2019) Towards plausibility of audiovisual simulations in the laboratory: methods and first results from subjects with normal hearing or with hearing impairment. Zeitschrift für Audiologie/Audiological Acoustics. Z Audiol 2019, 58 (1), 6-15. DOI: 10.4126/FRL01-006412919